Code

!pip install sidetable -qBy Sasha Fridman

We aim to reveal key drivers of sales and revenues of our online store.

While the business has overall proven to be profitable, there is a need to identify products’ characteristics and sales patterns that contribute significantly to business growth, as well as those that may have a negative impact.

Note: This analysis is complemented by an interactive dashboard for data exploration and a presentation summarizing key findings for stakeholders. Click below to access each component:

The dataset contains sales entries of an online store that sells household goods.

The file ecommerce_dataset_us.csv contains the following columns:

InvoiceNo — order identifierStockCode — item identifierDescription — item nameQuantity— quantity of itemsInvoiceDate — order dateUnitPrice — price per itemCustomerID — customer identifierTransaction-related terms

“Entry” (or “purchase”) - represents a single line in our dataset - one specific product being bought. While technically these are “entries” in our data, we often use the word “purchase” in more natural contexts. Each entry includes details like stock code, quantity, unit price, and invoice number.

“Invoice” (or “order”) - a group of entries representing a single transaction. An invoice can contain one or several entries (commonly, different products) purchased by the same customer at the same time.

In essence, each invoice represents a complete order, while entries show us purchases of individual products within that order. Technically (assuming no missing invoice numbers), counting unique invoice numbers (“nunique”) gives us the total number of orders, while counting all invoice entries (“count”) gives us the total number of individual product purchases.

“Mutually exclusive entries” - these are pairs of entries where a customer makes and then returns the same purchase, with matching quantity, price, and stock code, but opposite signs for quantity and revenue. Some return scenarios (like partial returns or price differences) may not be captured by this definition. We have developed an approach for handling such cases, which will be explained and applied later in the Distribution Analysis section of the project.

“Returns” - are defined as negative quantity entries from mutually exclusive entries. The overall return volume might be slightly larger, as some returns could have been processed outside our defined return identification rules (for example, when a customer buys and returns the same product but at a different price or quantity).

“Operation” (or “operational entry”) - an entry that represents non-product sales activity, like delivery, marketplace-related entries, service charges, or inventory adjustments (description examples: “POSTAGE”, “Amazon Adjustment”, “Bank Charges”, “damages”). We will analyze these cases and their impact, but exclude them from our product range analysis when they add noise without meaningful insights.

General terms

“Sales volume” (or “purchases volume”) - we will use these terms to refer to quantity of units sold, not revenue generated from purchases.

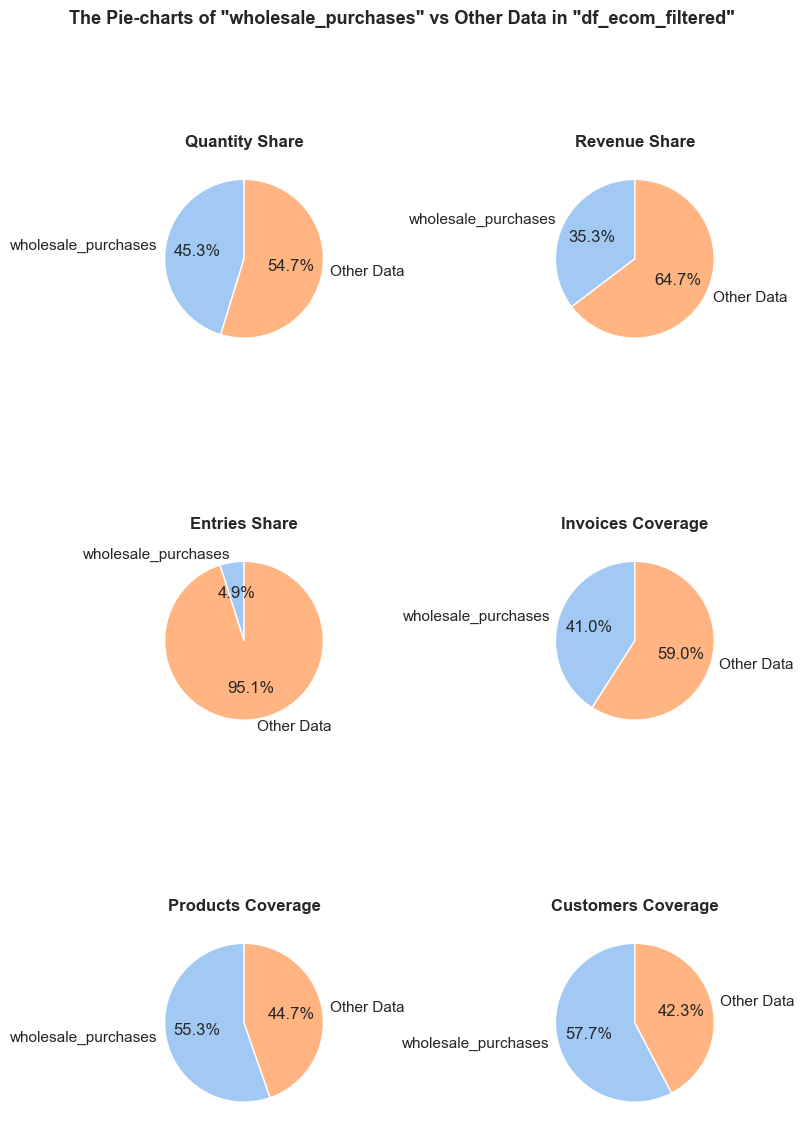

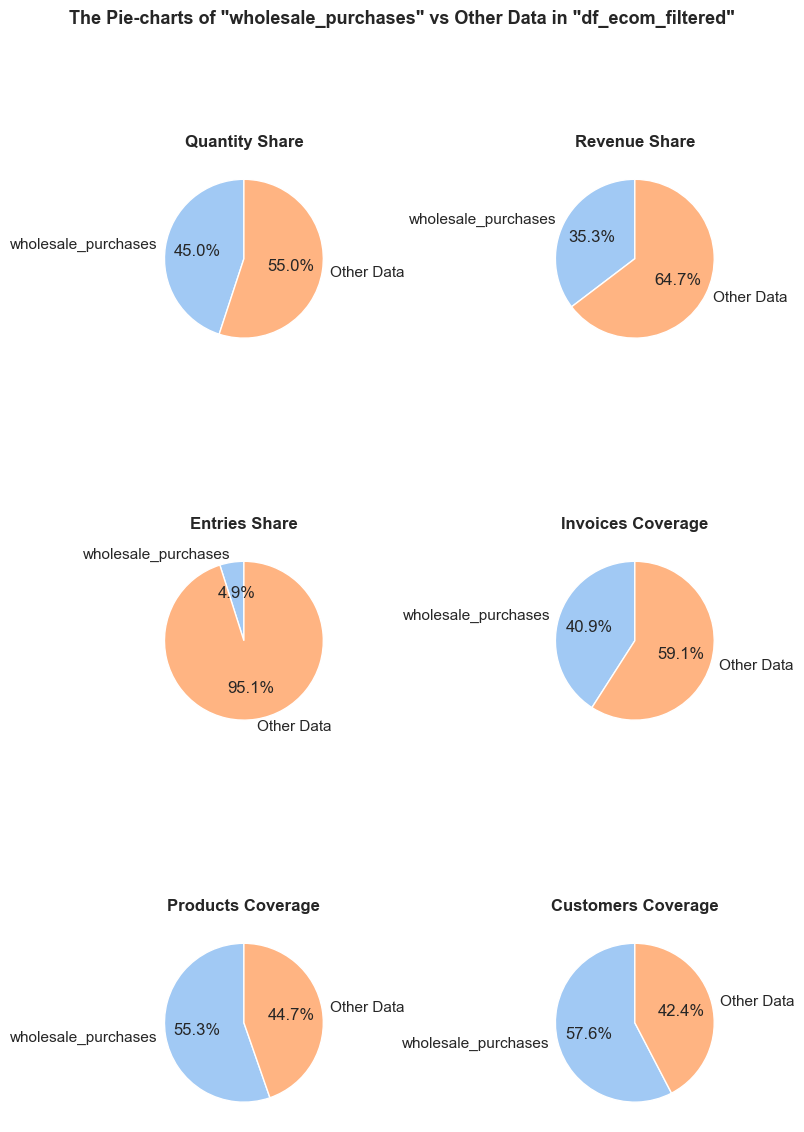

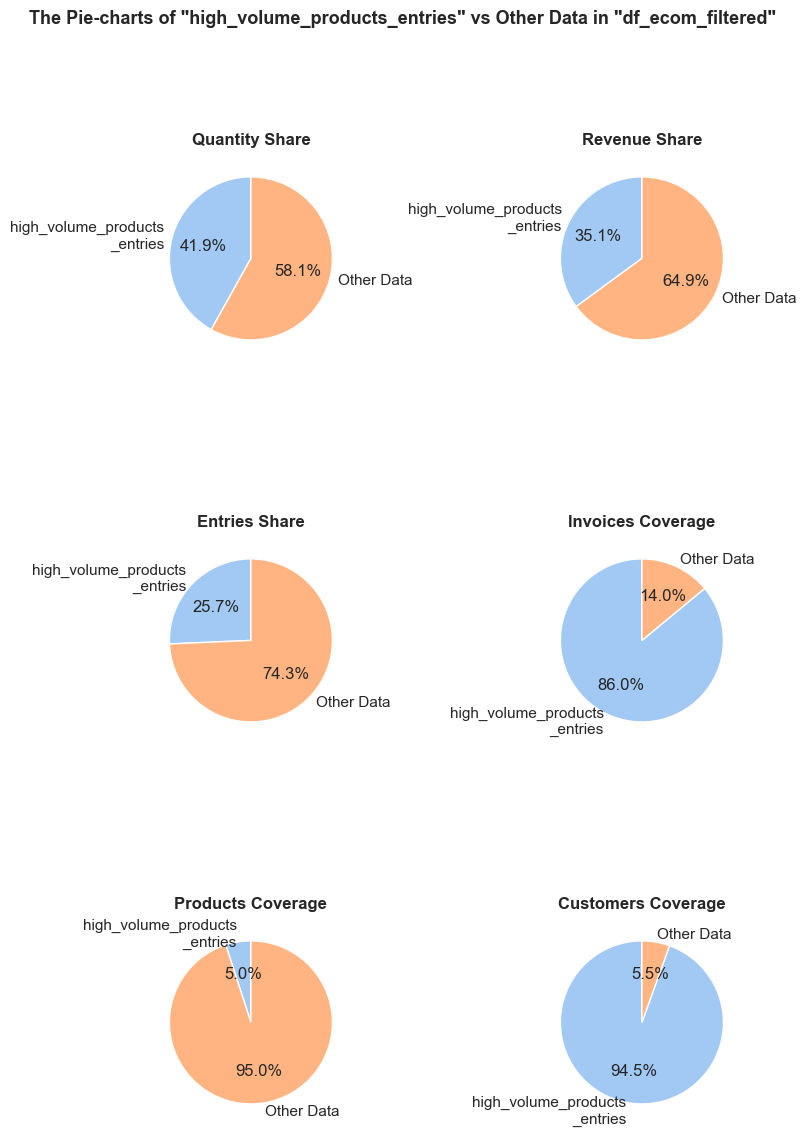

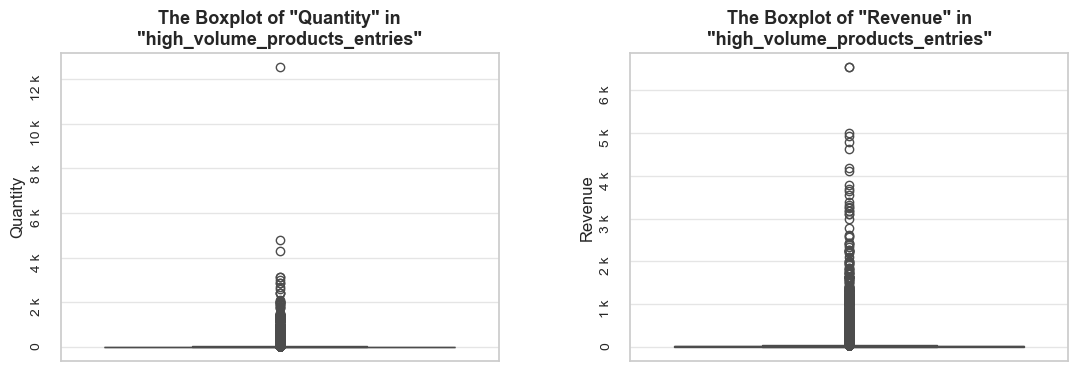

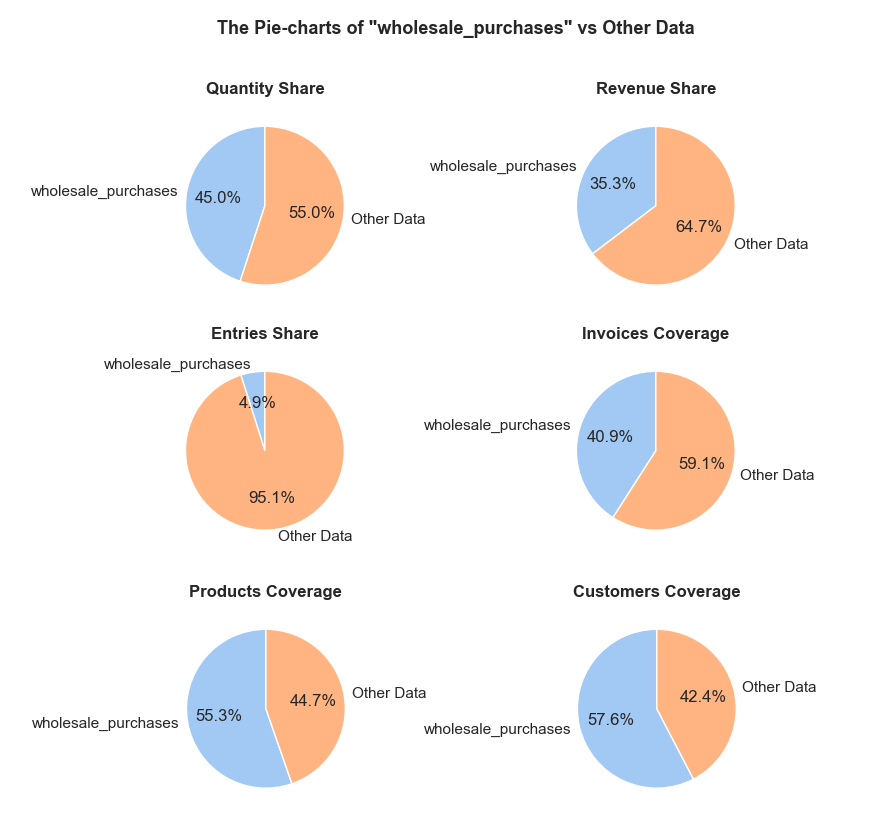

“Wholesale purchases” - are defined as entries (individual product purchases) where the quantity falls within the top 5% of all entries.

“High-volume products” - are defined as products whose purchases volume (sum of quantities across all entries) falls within the top 5% of all products.

“High-volume customers” - are defined as customers whose purchases volume (sum of quantities across all entries) falls within the top 5% of all customers.

“Expensive products” - are defined as products whose *median unit price per entry falls within the top 5% of all products’ median unit prices.

“Cheap products” - are defined as products whose *median unit price per entry falls within the bottom 5% of all products’ median unit prices.

“New products” - are defined as products that experienced sales in the last three months of our dataset, but never before.

*Note: Here we use medians, since they better than means represent typical values for non-normal distributions, that has been proven to be the case in our study.

“IQR (Interquartile Range)” - the range between the first quartile (25th percentile) and third quartile (75th percentile) of the data. In our analysis, we will primarily use IQR for outliers detection.

💡 - An important insight relevant to this specific part of the study.

💡💡 - A key insight with significant implications for the entire project.

⚠ - Information requiring special attention (e.g., major clarifications or decision explanations), as it may impact further analysis.

Additional clarifications with more local relevance are preceded by the bold word “Note” and/or highlighted in italics.

!pip install sidetable -q# data manipulation libraries

import pandas as pd

import numpy as np

import scipy.stats as stats

import sidetable

# date and time handling

from datetime import datetime, timedelta

import calendar

# visualization libraries

import matplotlib.pyplot as plt

import matplotlib.ticker as ticker

from matplotlib.ticker import ScalarFormatter, EngFormatter

import seaborn as sns

import plotly.express as px

import plotly.graph_objects as go

from plotly.subplots import make_subplots

# statistical and language processing libraries

import math

import re

import nltk

from nltk.corpus import wordnet as wn

from nltk.corpus import stopwords

# Matplotlib and Seaborn visualization configuration

plt.style.use('seaborn-v0_8') # more attractive styling

plt.rcParams.update({

'figure.figsize': (12, 7),

'grid.alpha': 0.5,

'grid.linestyle': '--',

'font.size': 10,

'axes.titlesize': 14,

'axes.labelsize': 10})

sns.set_theme(style="whitegrid", palette="deep")

# Pandas display options

pd.set_option('display.max_columns', None)

table_width = 150

pd.set_option('display.width', table_width)

col_width = 40

pd.set_option('display.max_colwidth', col_width)

#pd.set_option('display.precision', 2)

pd.set_option('display.float_format', '{:.2f}'.format) # displaying normal numbers instead of scientific notation

# Python and Jupyter/IPython utility libraries and settings

import warnings

warnings.filterwarnings('ignore', category=FutureWarning)

warnings.filterwarnings('ignore', category=UserWarning)

from IPython.core.interactiveshell import InteractiveShell

InteractiveShell.ast_node_interactivity = 'all' # notebook enhanced output

from IPython.display import display, HTML, Markdown # broader options for text formatting and displaying

import textwrap # for formatting and wrapping text (e.g. to manage long strings in outputs)# loading the data file into a DataFrame

try:

df_ecom = pd.read_csv('C:/Users/4from/Desktop/Practicum/13. Final project/datasets/ecommerce_dataset_us.csv', sep='\t')

except:

df_ecom = pd.read_csv('/datasets/ecommerce_dataset_us.csv', sep='\t')

Let’s enhance efficiency of our further analysis by creating two functions: get_df_name and data_inspection.

Function: get_df_name

The get_df_name function retrieves and returns the name of a DataFrame variable as a string, what will be handy for displaying information explicitly by other functions.

def get_df_name(df):

"""

The function returns the user-defined name of the DataFrame variable as a string.

Input: the DataFrame whose name must be extracted.

Output: the name of the DataFrame.

"""

for name, value in globals().items():

if value is df:

if not name.startswith('_'): # excluding internal names

return name

return "name not found"Function: data_inspection

The data_inspection function performs comprehensive inspections of a given DataFrame. It provides insights into the dataset’s structure, including concise summaries, examples, descriptive statistics, categorical parameter statistics, missing values, and duplicates.

def data_inspection(df, show_example=True, example_type='head', example_limit=5, frame_len=120):

"""

The function performs various data inspections on a given DataFrame.

As input it takes:

- df: a DataFrame to be evaluated.

- show_example (bool, optional): whether to display examples of the DataFrame. By default - True.

- example_type (str, optional): type of examples to display ('sample', 'head', 'tail'). By default - 'head'.

- example_limit (int, optional): maximum number of examples to display. By default - 5.

- frame_len (int, optional): the length of frame of printed outputs. Default - 40.

- frame_len (int, optional): the length of frame of printed outputs. Default - 40. If `show_example` is True, frame_len is set to minimum of the values: manually set `frame_len` and `table_width (which is defined at the project initiation stage).

As output it presents:

- Displays concise summary.

- Displays examples of the `df` DataFrame (if `show_example` is True)

- Displays descriptive statistics.

- Displays descriptive statistics for categorical parameters.

- Displays information on missing values.

- Displays information on dublicates.

"""

# adjusting output frame; "table_width" is set at project initiation stage

frame_len = min(table_width, frame_len) if show_example else frame_len

# retrieving a name of the DataFrame

df_name = get_df_name(df)

# calculating figures on duplicates

dupl_number = df.duplicated().sum()

dupl_share = round(df.duplicated().mean()*100, 1)

# displaying information about the DataFrame

print('='*frame_len)

display(Markdown(f'**Overview of `{df_name}`:**'))

print('-'*frame_len)

print(f'\033[1mConcise summary:\033[0m')

print(df.info(), '\n')

if show_example:

print('-'*frame_len)

example_messages = {'sample': 'Random examples', 'head': 'Top rows', 'tail': 'Bottom rows'}

example_methods = {'sample': df.sample, 'head': df.head, 'tail': df.tail}

message = example_messages.get(example_type)

method = example_methods.get(example_type)

print(f'\033[1m{message}:\033[0m')

print(method(min(example_limit, len(df))), '\n')

print('-'*frame_len)

print(f'\033[1mDescriptive statistics:\033[0m')

print(df.describe(), '\n')

print('-'*frame_len)

print(f'\033[1mDescriptive statistics of categorical parameters:\033[0m')

print(df.describe(include=['object']), '\n') # printing descriptive statistics for categorical parameters

print('-'*frame_len)

print(f'\033[1mMissing values:\033[0m')

display(df.stb.missing(style=True))

print('-'*frame_len)

print(f'\033[1mNumber of duplicates\033[0m: {dupl_number} ({dupl_share :.1f}% of all entries)\n')

print('='*frame_len)data_inspection(df_ecom, show_example=True, example_type='sample', example_limit=5)========================================================================================================================Overview of df_ecom:

------------------------------------------------------------------------------------------------------------------------ Concise summary: <class 'pandas.core.frame.DataFrame'> RangeIndex: 541909 entries, 0 to 541908 Data columns (total 7 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 InvoiceNo 541909 non-null object 1 StockCode 541909 non-null object 2 Description 540455 non-null object 3 Quantity 541909 non-null int64 4 InvoiceDate 541909 non-null object 5 UnitPrice 541909 non-null float64 6 CustomerID 406829 non-null float64 dtypes: float64(2), int64(1), object(4) memory usage: 28.9+ MB None ------------------------------------------------------------------------------------------------------------------------ Random examples: InvoiceNo StockCode Description Quantity InvoiceDate UnitPrice CustomerID 146782 C549019 22230 JIGSAW TREE WITH WATERING CAN -2 04/03/2019 15:22 0.85 12474.00 471427 576644 23199 JUMBO BAG APPLES 14 11/14/2019 10:01 4.13 NaN 31173 538902 21879 HEARTS GIFT TAPE 24 12/13/2018 10:04 0.19 14150.00 497667 578445 22355 CHARLOTTE BAG SUKI DESIGN 10 11/22/2019 12:25 0.85 15005.00 133553 547788 22602 RETROSPOT WOODEN HEART DECORATION 2 03/23/2019 12:00 1.63 NaN ------------------------------------------------------------------------------------------------------------------------ Descriptive statistics: Quantity UnitPrice CustomerID count 541909.00 541909.00 406829.00 mean 9.55 4.61 15287.69 std 218.08 96.76 1713.60 min -80995.00 -11062.06 12346.00 25% 1.00 1.25 13953.00 50% 3.00 2.08 15152.00 75% 10.00 4.13 16791.00 max 80995.00 38970.00 18287.00 ------------------------------------------------------------------------------------------------------------------------ Descriptive statistics of categorical parameters: InvoiceNo StockCode Description InvoiceDate count 541909 541909 540455 541909 unique 25900 4070 4223 23260 top 573585 85123A WHITE HANGING HEART T-LIGHT HOLDER 10/29/2019 14:41 freq 1114 2313 2369 1114 ------------------------------------------------------------------------------------------------------------------------ Missing values:

| missing | total | percent | |

|---|---|---|---|

| CustomerID | 135,080 | 541,909 | 24.93% |

| Description | 1,454 | 541,909 | 0.27% |

| InvoiceNo | 0 | 541,909 | 0.00% |

| StockCode | 0 | 541,909 | 0.00% |

| Quantity | 0 | 541,909 | 0.00% |

| InvoiceDate | 0 | 541,909 | 0.00% |

| UnitPrice | 0 | 541,909 | 0.00% |

------------------------------------------------------------------------------------------------------------------------

Number of duplicates: 5268 (1.0% of all entries)

========================================================================================================================

# checking the dataset scope

columns = ['CustomerID', 'Description', 'StockCode', 'InvoiceNo']

first_invoice_day = pd.to_datetime(df_ecom['InvoiceDate']).min().date()

last_invoice_day = pd.to_datetime(df_ecom['InvoiceDate']).max().date()

total_period = (last_invoice_day - first_invoice_day).days

print('='*60)

display(Markdown(f'**The scope of `df_ecom`:**'))

print('-'*60)

print(f'\033[1mNumber of unique values:\033[0m')

for column in columns:

print(f' \033[1m`{column}`\033[0m - {df_ecom[column].nunique()}')

print('-'*60)

print(f'\033[1mEntries (purchases) per invoice:\033[0m\

mean - {df_ecom.groupby("InvoiceNo").size().mean() :0.1f},\

median - {df_ecom.groupby("InvoiceNo").size().median() :0.1f}')

print(f'\033[1mInvoices (orders) per customer:\033[0m\

mean - {df_ecom.groupby("CustomerID")["InvoiceNo"].nunique().mean() :0.1f},\

median - {df_ecom.groupby("CustomerID")["InvoiceNo"].nunique().median() :0.1f}')

print('-'*60)

print(f'\033[1mOverall period:\033[0m\

{first_invoice_day} - {last_invoice_day}, {total_period} days in total')

print('='*60)============================================================The scope of df_ecom:

------------------------------------------------------------ Number of unique values: `CustomerID` - 4372 `Description` - 4223 `StockCode` - 4070 `InvoiceNo` - 25900 ------------------------------------------------------------ Entries (purchases) per invoice: mean - 20.9, median - 10.0 Invoices (orders) per customer: mean - 5.1, median - 3.0 ------------------------------------------------------------ Overall period: 2018-11-29 - 2019-12-07, 373 days in total ============================================================

Let’s examine temporal consistency of invoices by ensuring each invoice has only one concrete timestamp.

# checking whether all the invoices are associated with an only one timestamp

invoices_dates = df_ecom.groupby('InvoiceNo').agg(

unique_dates_number = ('InvoiceDate', 'nunique'),

unique_dates = ('InvoiceDate', 'unique')

).reset_index().sort_values(by='unique_dates_number', ascending=False)

invoices_dates['unique_dates_number'].value_counts()

# filtering invoices with multiple timestamps

invoices_multiple_dates = invoices_dates.query('unique_dates_number > 1')

invoices_multiple_dates.sample(3)unique_dates_number

1 25857

2 43

Name: count, dtype: int64| InvoiceNo | unique_dates_number | unique_dates | |

|---|---|---|---|

| 19330 | 576057 | 2 | [11/11/2019 15:05, 11/11/2019 15:06] |

| 6684 | 550320 | 2 | [04/15/2019 12:37, 04/15/2019 12:38] |

| 1788 | 540185 | 2 | [01/03/2019 13:40, 01/03/2019 13:41] |

# adding a column displaying time difference between timestamps (for rare cases with 2 timestamps, normally there's only 1)

invoices_multiple_dates = invoices_multiple_dates.copy() # avoiding SettingWithCopyWarning

invoices_multiple_dates['days_delta'] = invoices_multiple_dates['unique_dates'].apply(

lambda x: pd.to_datetime(x[1]) - pd.to_datetime(x[0]))

# checking the result

invoices_multiple_dates.sample(3)

invoices_multiple_dates['days_delta'].describe()| InvoiceNo | unique_dates_number | unique_dates | days_delta | |

|---|---|---|---|---|

| 10527 | 558086 | 2 | [06/24/2019 11:58, 06/24/2019 11:59] | 0 days 00:01:00 |

| 8212 | 553375 | 2 | [05/14/2019 14:52, 05/14/2019 14:53] | 0 days 00:01:00 |

| 1788 | 540185 | 2 | [01/03/2019 13:40, 01/03/2019 13:41] | 0 days 00:01:00 |

count 43

mean 0 days 00:01:00

std 0 days 00:00:00

min 0 days 00:01:00

25% 0 days 00:01:00

50% 0 days 00:01:00

75% 0 days 00:01:00

max 0 days 00:01:00

Name: days_delta, dtype: objectObservations

InvoiceNo is of an object type. If possible, it should be converted to integer type.InvoiceDate is of an object type. It should be converted to datetime format.CustomerID is of a float type. It should be converted to string type (there’s no need for calculations with customer IDs, and keeping them in numeric format may affect further visualizations.)Quantity and UnitPrice columns. Further investigation is needed to understand and address these anomalies.CustomerID column has 25% missing values and the Description column has 0.3% missing values.Description) slightly exceeds that of stock codes (StockCode). It could be an indication of multiple-descriptions under same stock codes, probably non-product related descriptions as well. We will check this phenomenon in our next steps.Let’s enhance efficiency of our further analysis by developing two practical functions: data_reduction and share_evaluation. Considering that we will view long names on compact charts in our subsequent study, an extra wrap_text function will be useful to ensure a neat appearance.

Function: data_reduction

The function simplifies the process of filtering data based on a specified operation. This operation can be any callable function or lambda function that reduces the DataFrame according to specific criteria. The function tells us how many entries were removed and returns the reduced DataFrame.

def data_reduction(df, operation):

"""

The function reduces data based on the specified operation and provides number of cleaned out entries.

As input it takes:

- df (DataFrame): a DataFrame to be reduced.

- operation: a lambda function that performs the reduction operation on the DataFrame.

As output it presents:

- Displays a number of cleaned out entries.

- Returns a reduced DataFrame.

----------------

Example of usage (for excluding entries with negative quantities):

"cleaned_df = data_reduction(innitial_df, lambda df: df.query('quantity >= 0'))"

----------------

"""

entries_before = len(df)

try:

reduced_df = operation(df)

except Exception as error_message:

print(f"\033[1;31mError during data reduction:\033[0m {error_message}")

return df

entries_after = len(reduced_df)

cleaned_out_entries = entries_before - entries_after

cleaned_out_share = (entries_before - entries_after) / entries_before * 100

print(f'\033[1mNumber of entries cleaned out from the "{get_df_name(df)}":'

f'\033[0m {cleaned_out_entries} ({cleaned_out_share:0.1f}%)')

return reduced_dfFunction: share_evaluation

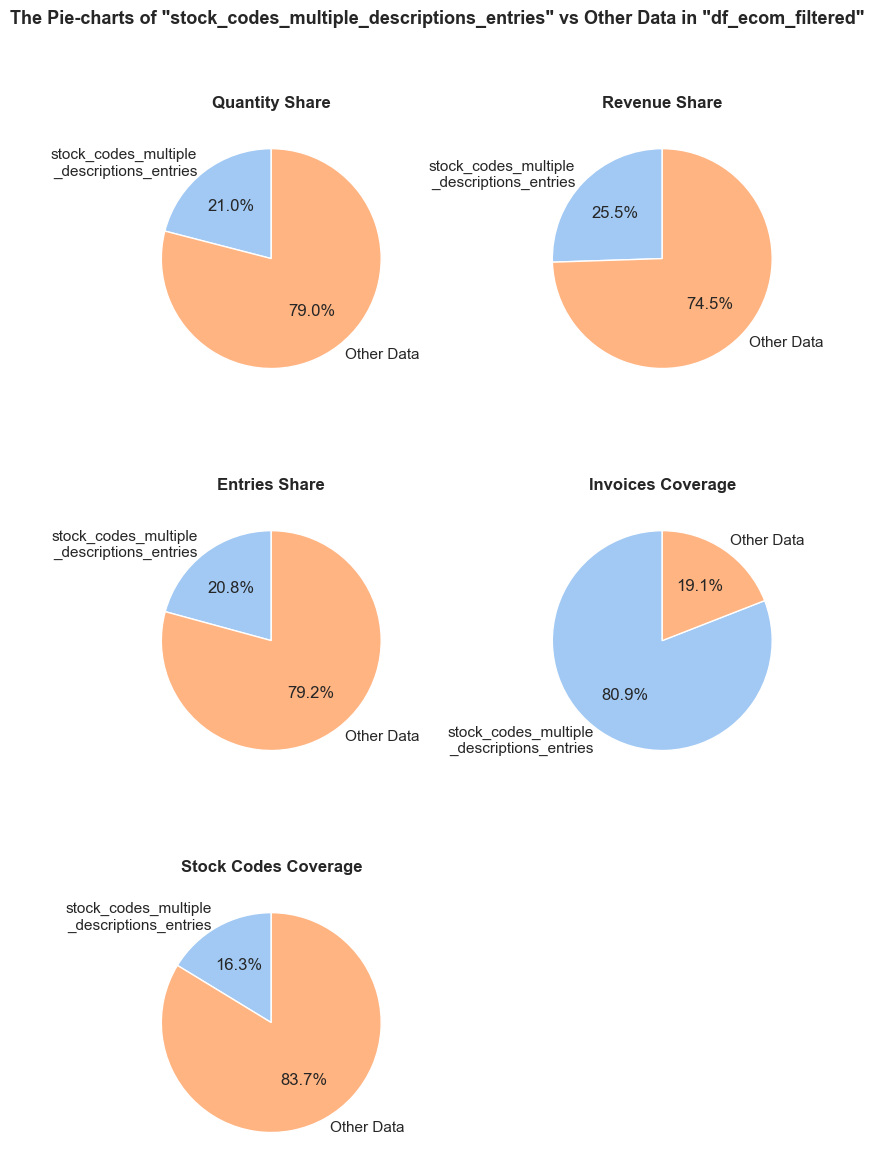

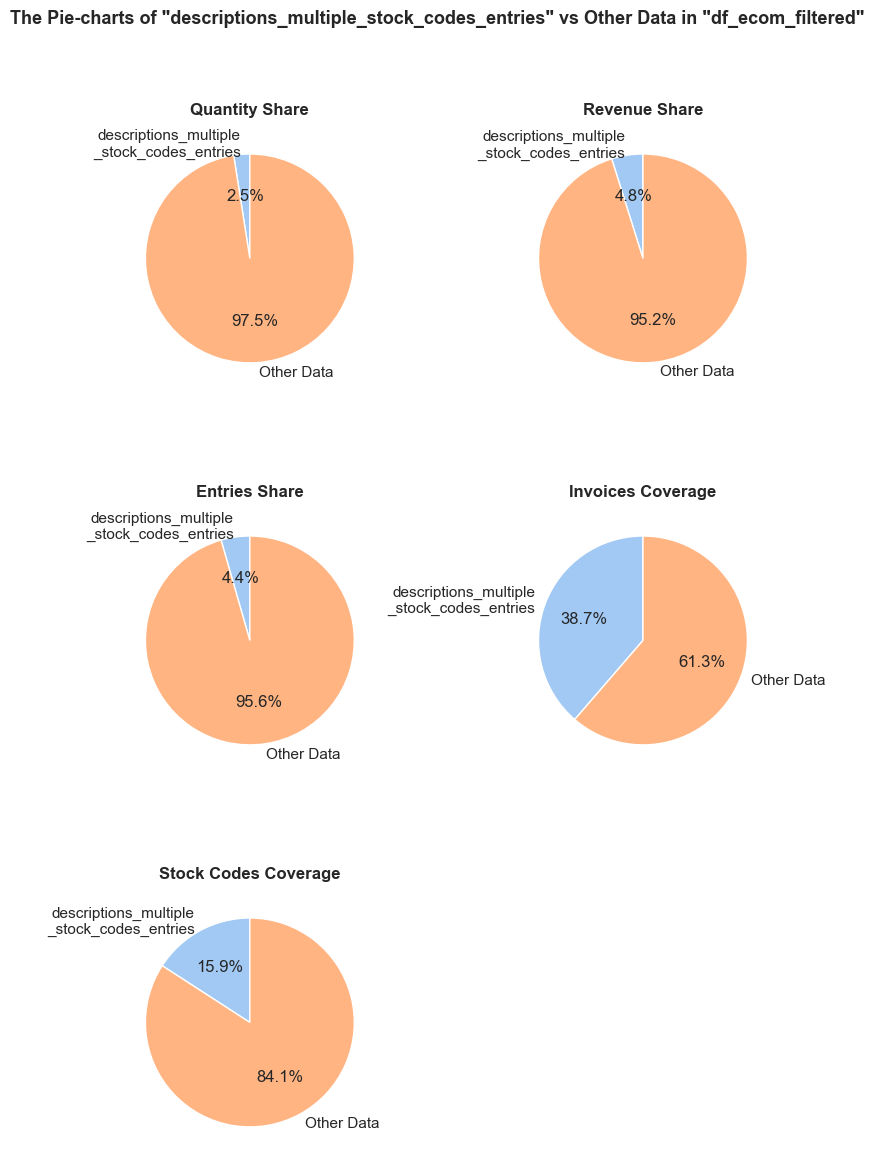

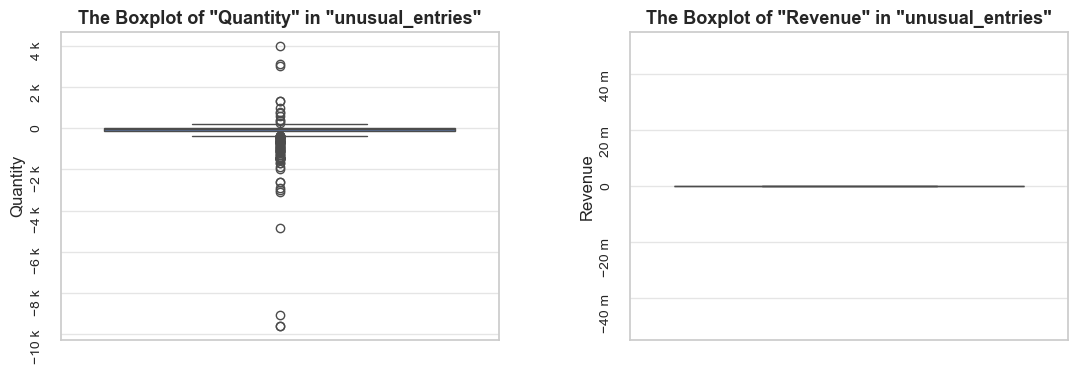

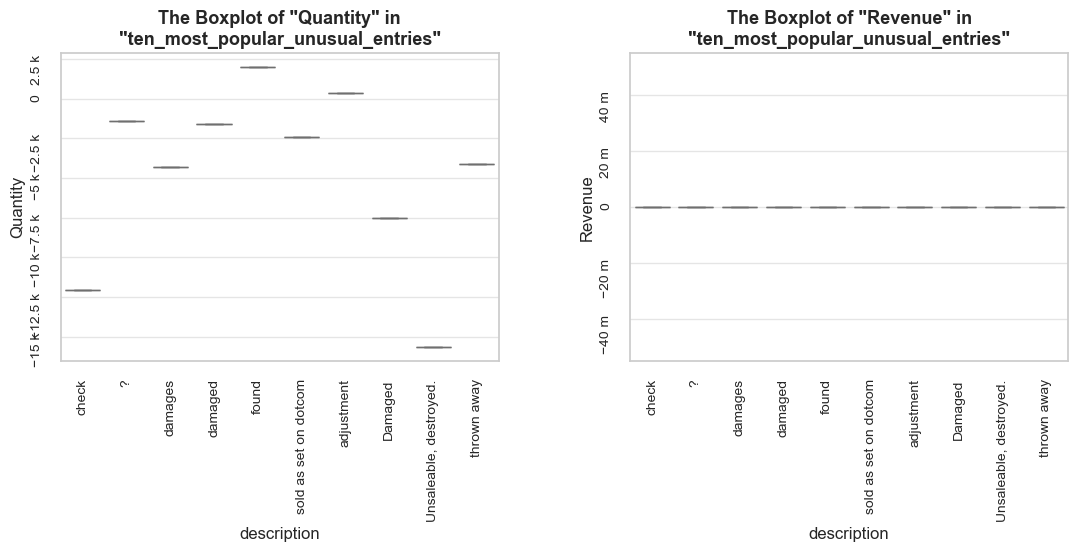

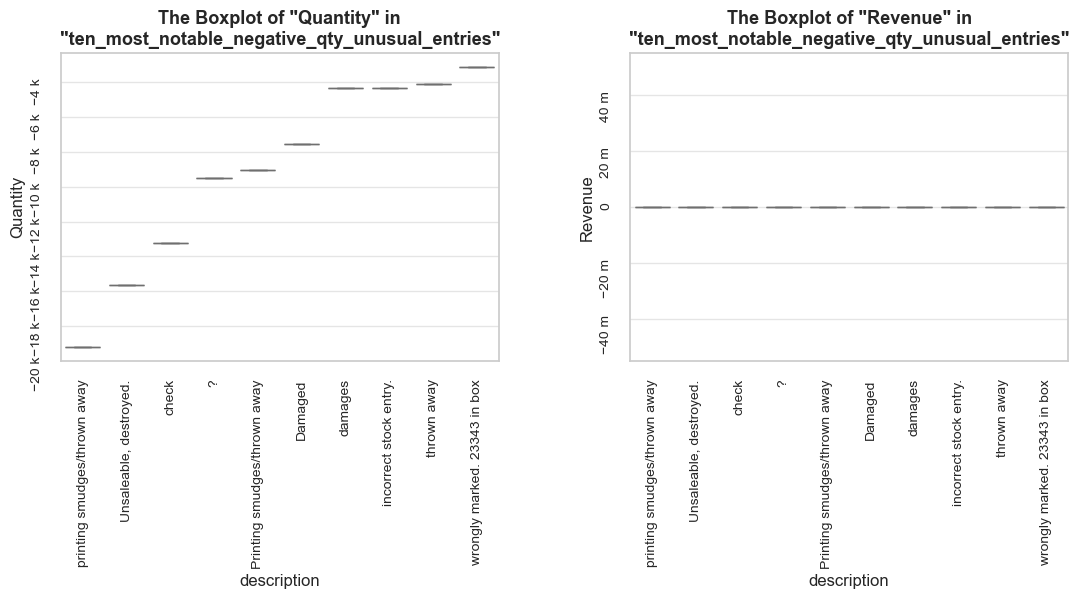

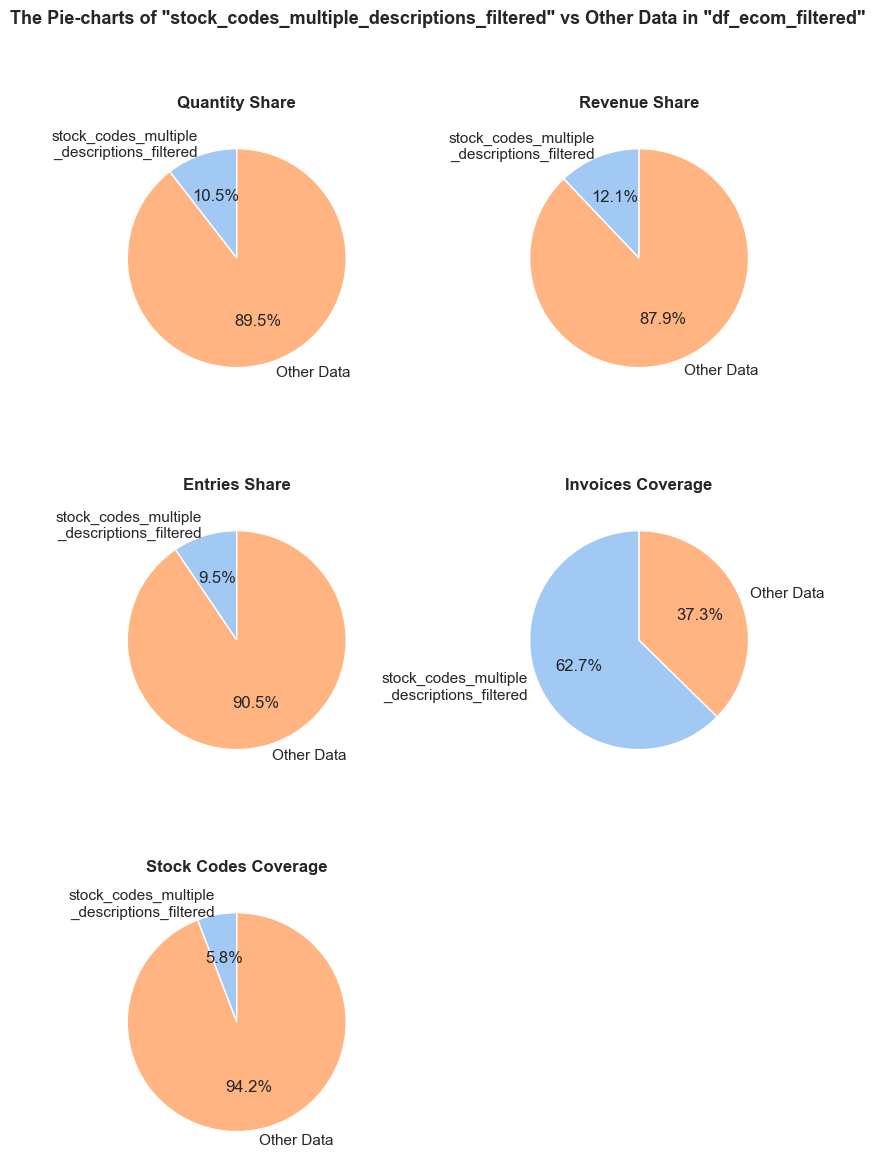

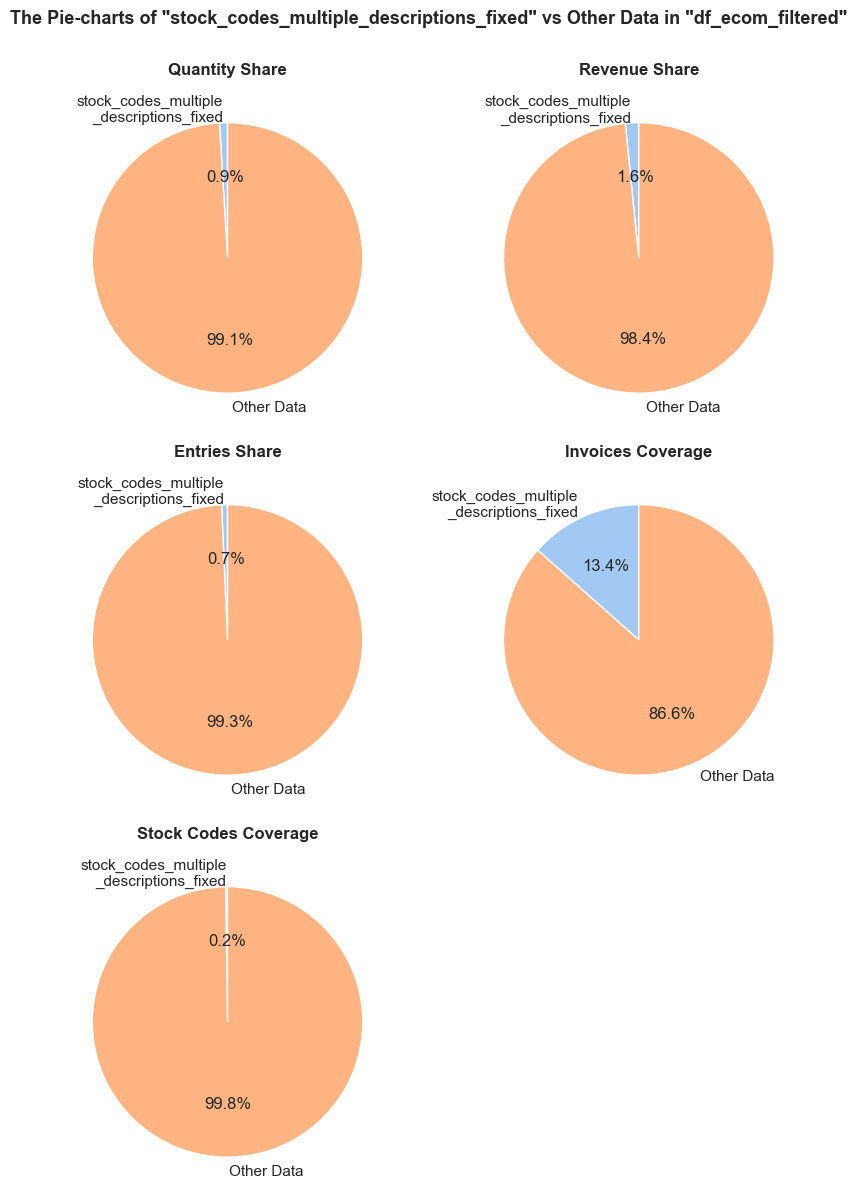

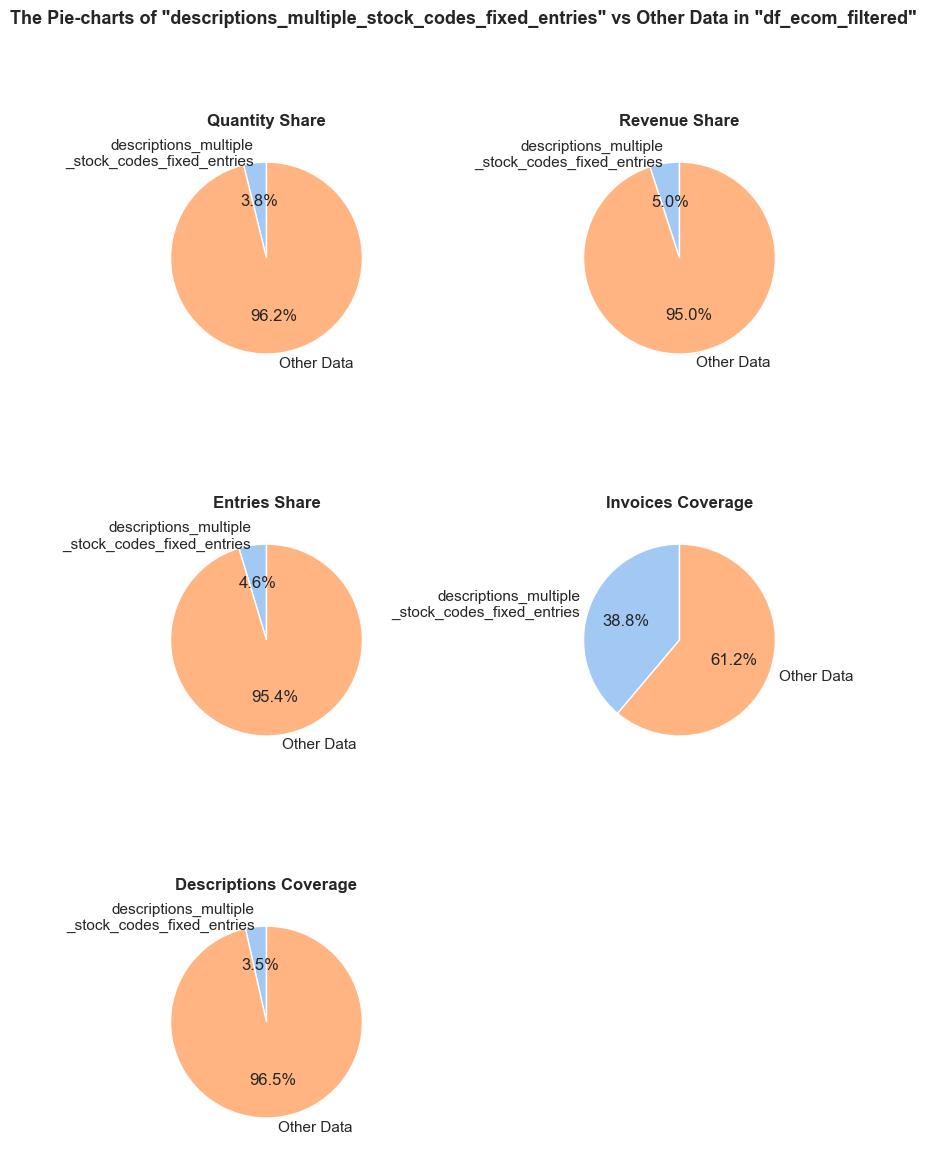

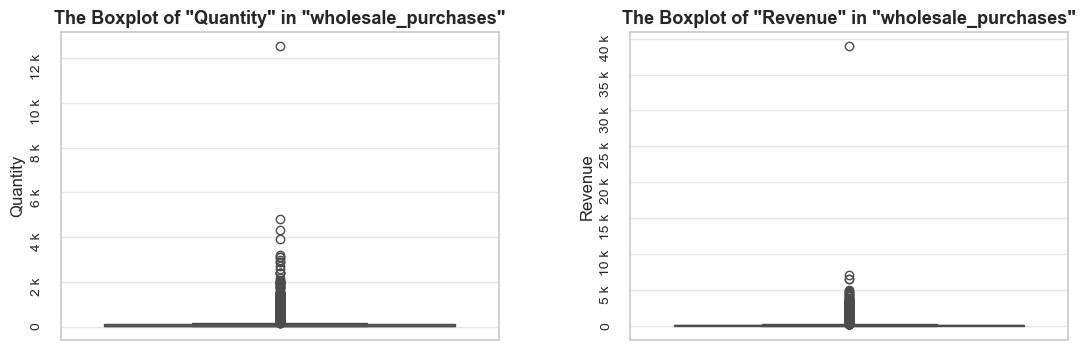

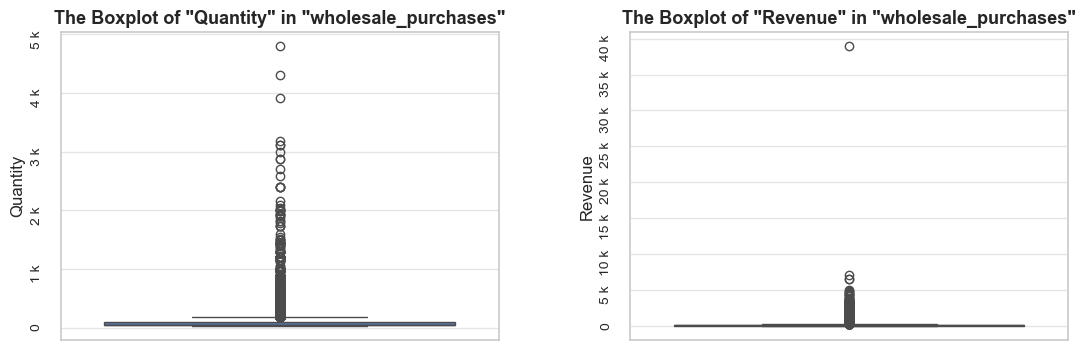

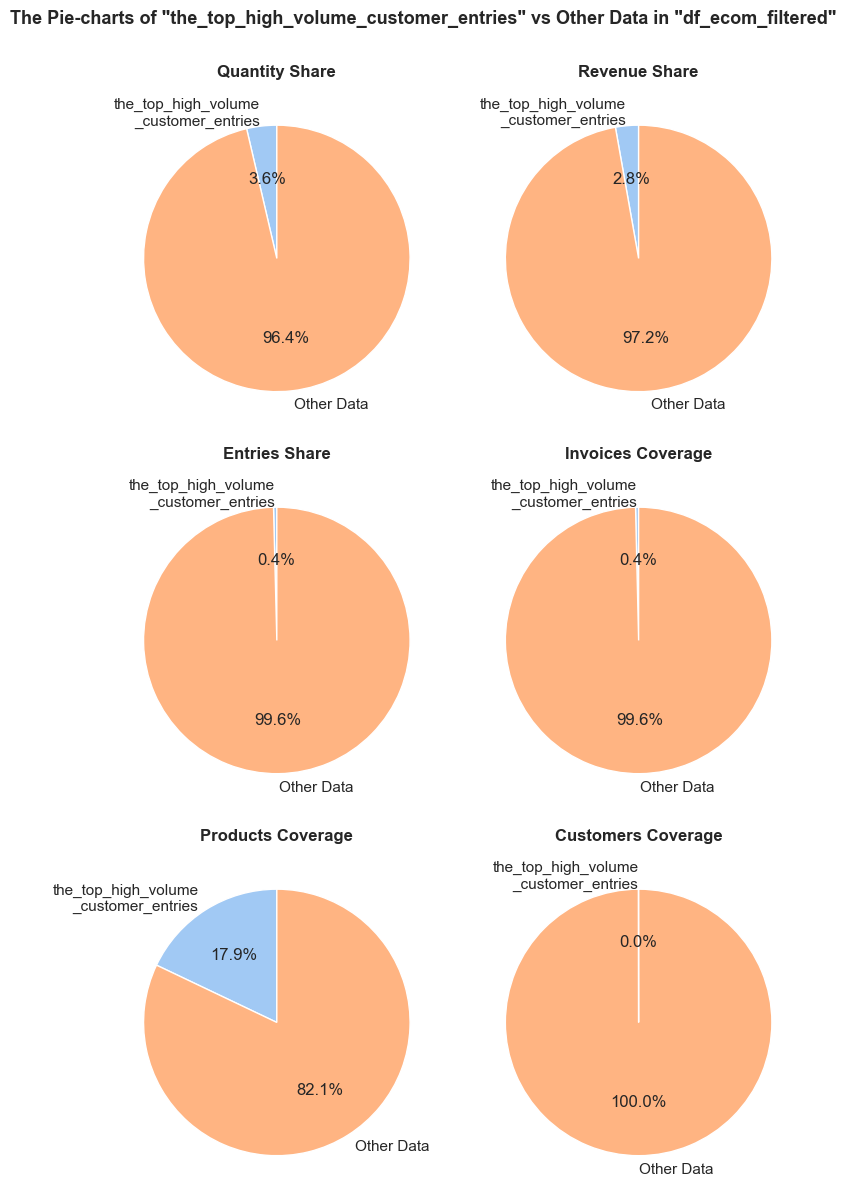

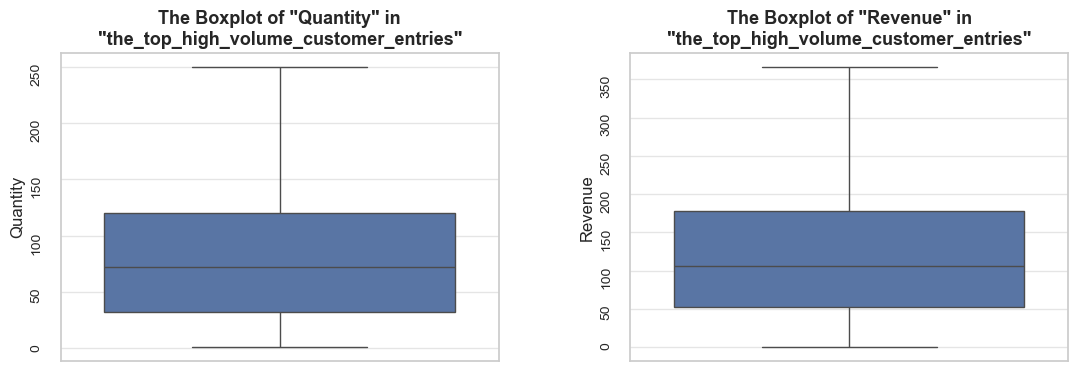

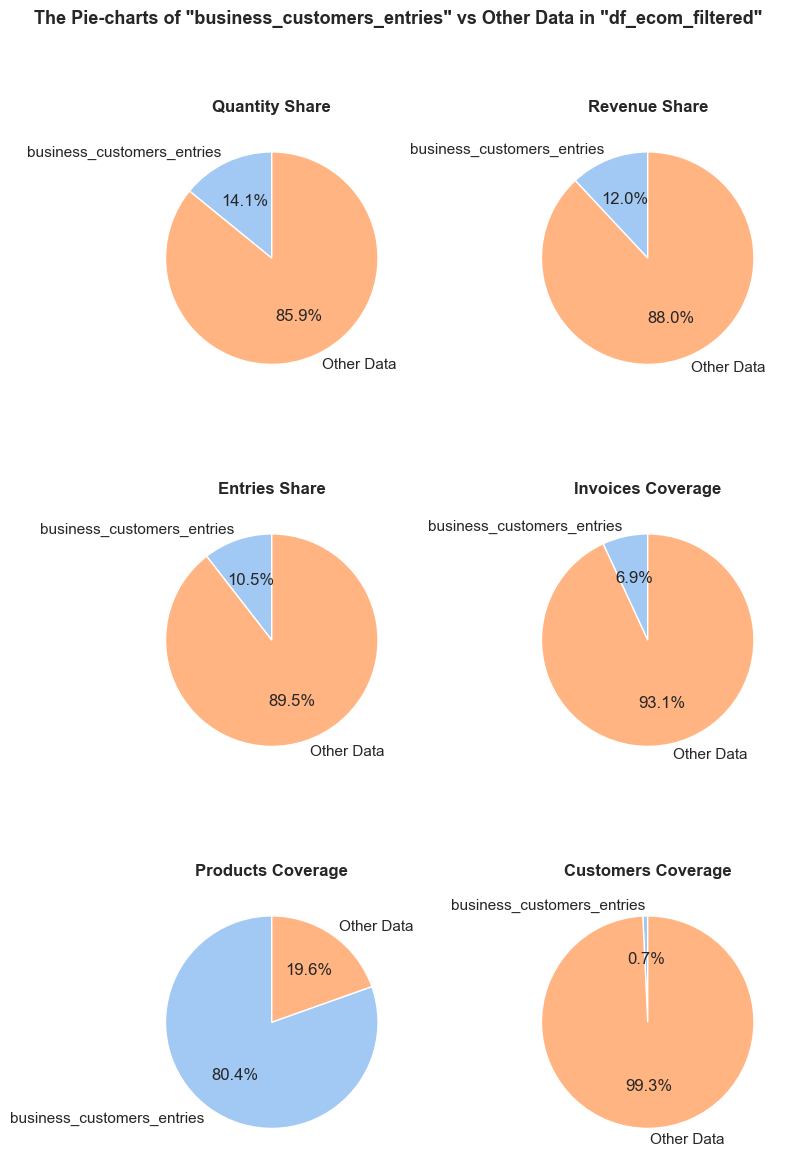

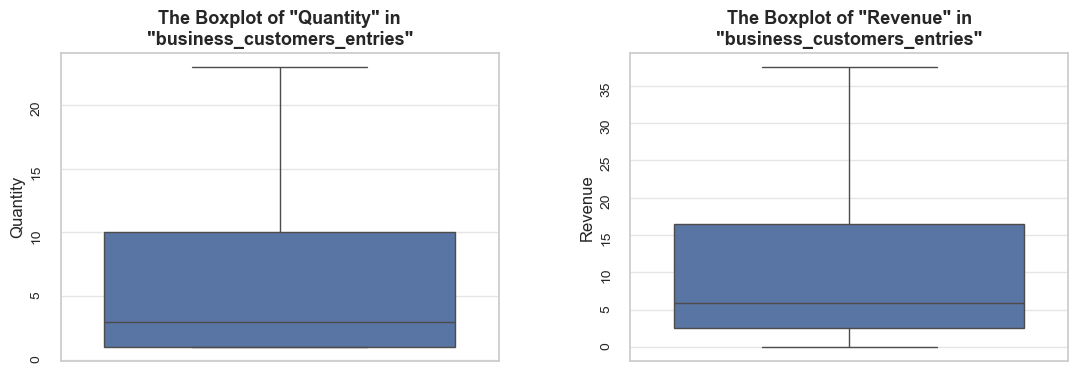

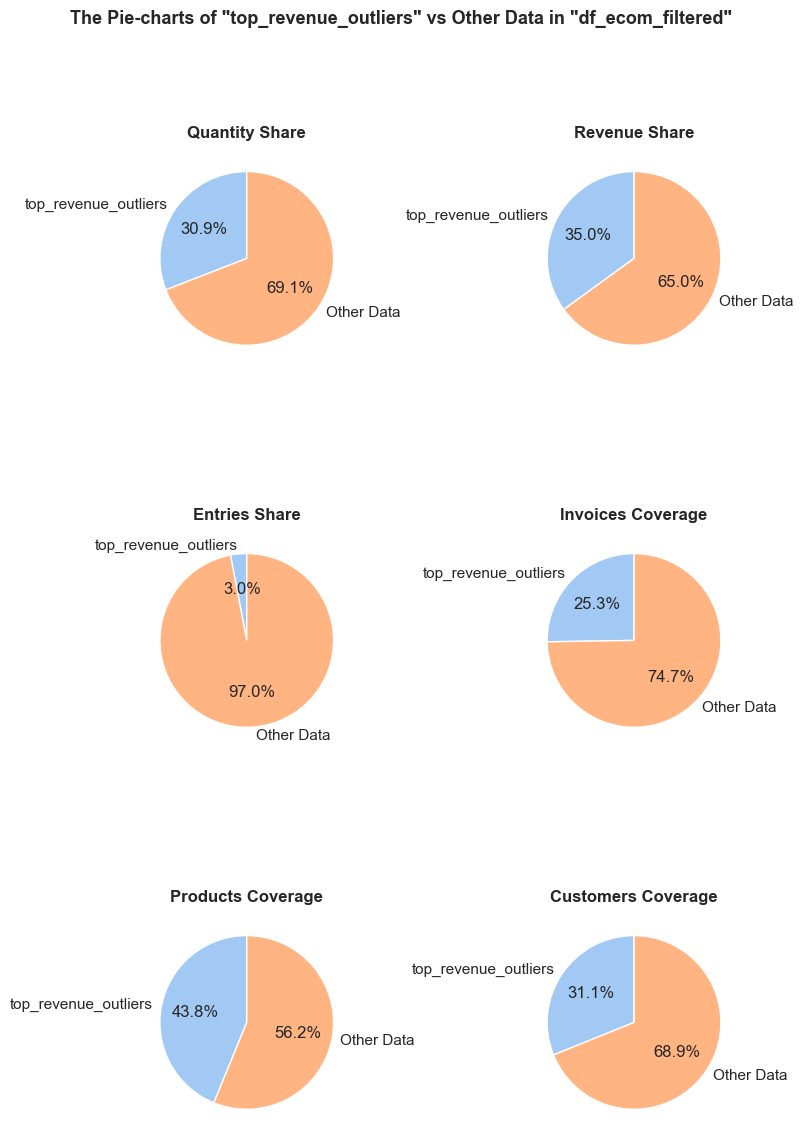

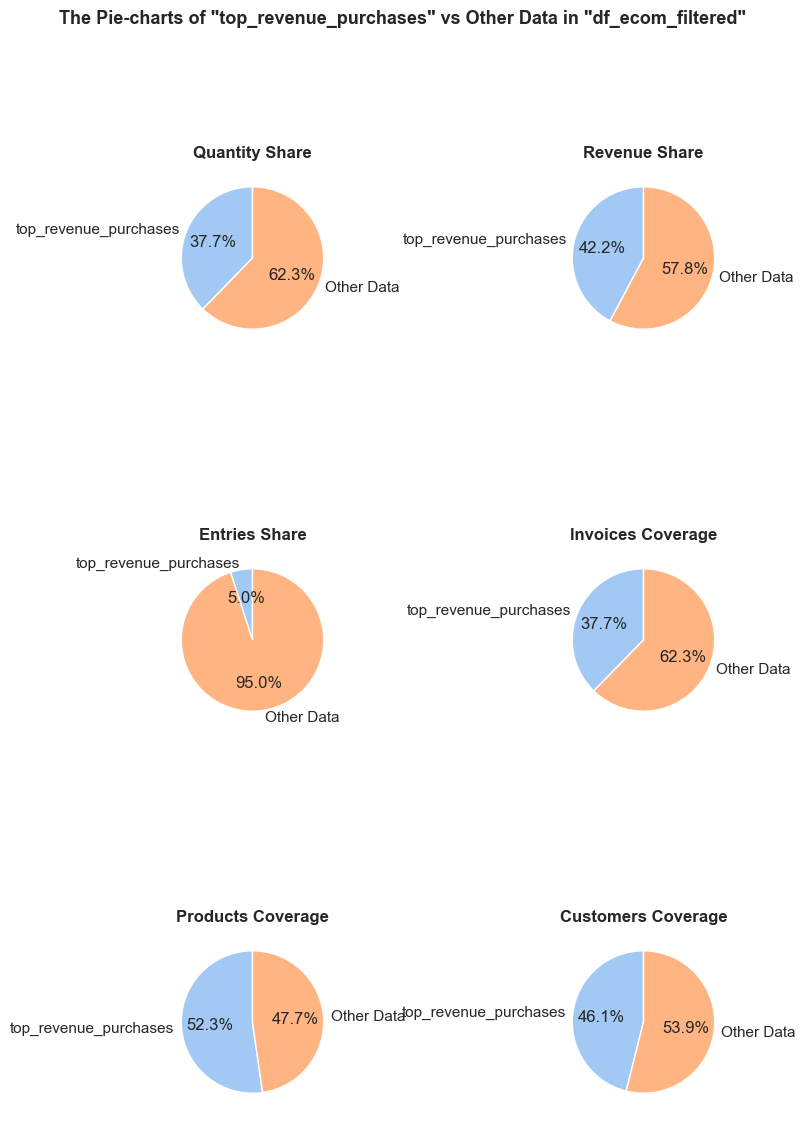

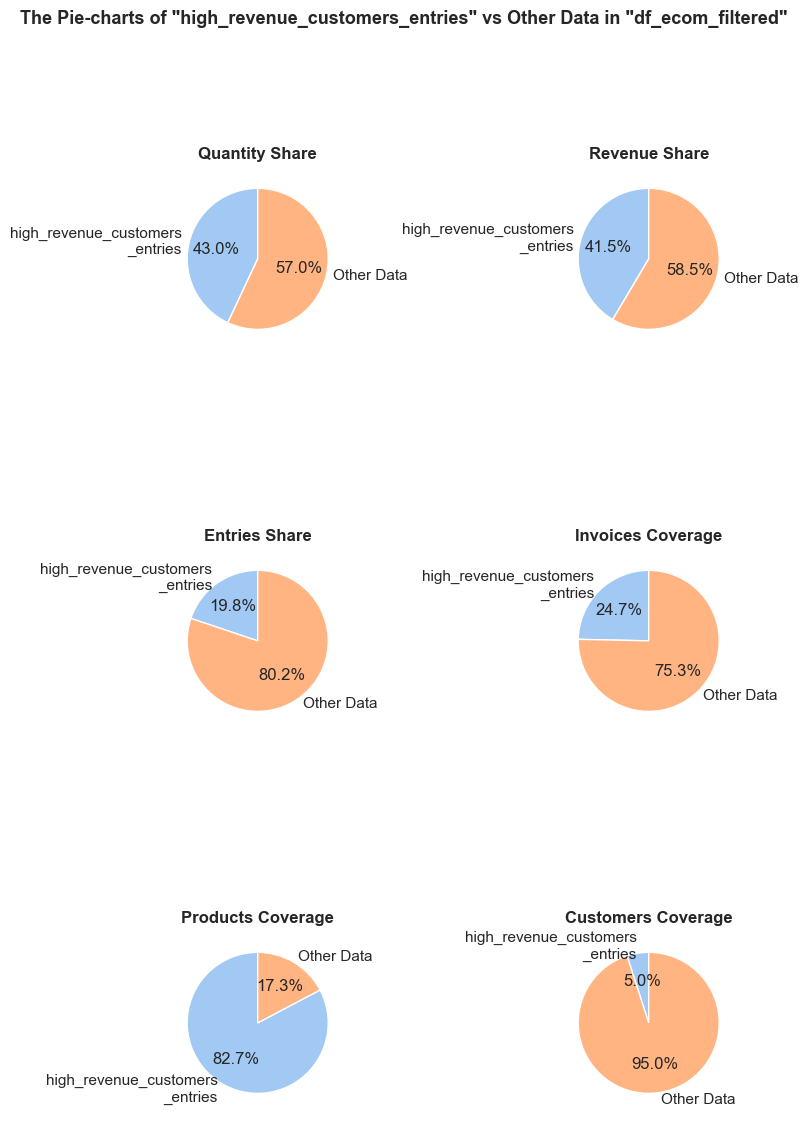

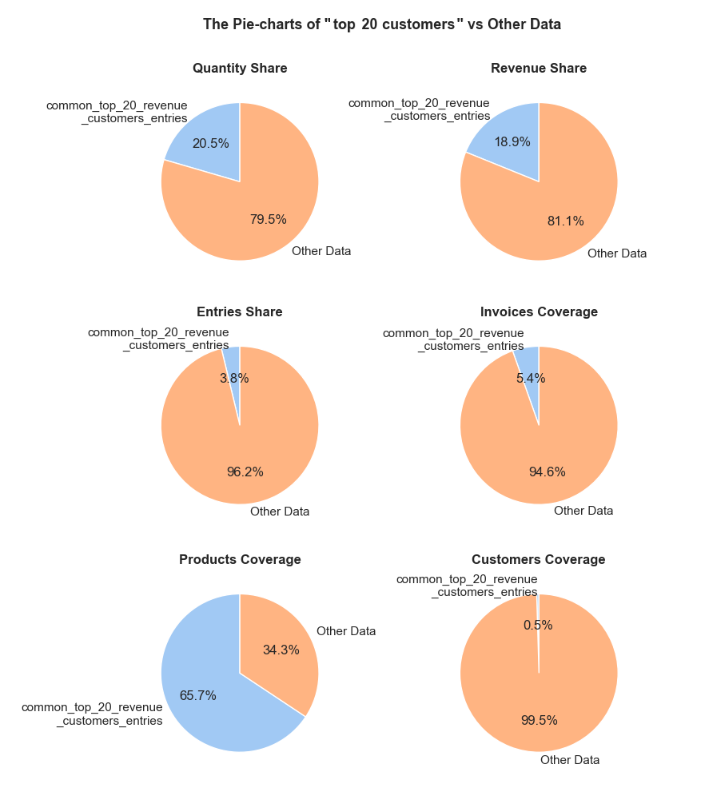

The function evaluates a share and characteristics of a subset of data compared to an initial dataset. It calculates and presents various metrics such as the percentage of entries, share of quantities and revenues (if applicable), invoice period coverage. It also optionally displays examples of a data subset, as well as pie charts and boxplot visualizations of parameters’ share and distributions. This function helps in understanding of a subset impact within a broader dataset, what is especially useful when it comes to decisions about removing irrelevant data.

def share_evaluation(df, initial_df, title_extension='',

show_qty_rev=False,

show_pie_charts=False,

pie_chart_parameters={

('quantity', 'sum'): 'Quantity Share',

('revenue', 'sum'): 'Revenue Share',

('invoice_no', 'count'): 'Entries Share'},

show_pie_charts_notes=True,

show_boxplots=False, boxplots_parameter=None, show_outliers=True,

show_period=False,

show_example=False, example_type='sample', random_state=None, example_limit=5,

frame_len=table_width):

"""

This function evaluates the share and characteristics of a data slice compared to an initial dataset.

It calculates and displays the following metrics for a given data slice:

- Percentage of entries relative to the initial dataset.

- Quantity and revenue totals together with their shares (if `show_qty_rev` is True).

- Pie charts of desired paramerers (if 'show_pie_charts' is True).

- Boxplots of `quantity` and `revenue` (if 'show_boxplots' is True).

- Invoice period coverage (if 'show_period' is True).

- Examples of the data slice (if 'show_example' is True).

As input, the function takes:

- df (DataFrame): a data slice to be evaluated.

- initial_df (DataFrame): an original dataset for comparison. Default - `df_ecom`.

- title_extension (str, optional): additional text to append to the summary and plot titles. Default - an empty string.

- show_qty_rev (bool, optional): whether to display the quantity and revenue figures along with their shares. By default - False.

Note: both datasets must contain a 'revenue' column to display this.

..........

- show_pie_charts (bool, optional): whether to display pie charts. Default - False.

Note: `show_qty_rev` must be True to display this.

- pie_chart_parameters (dict, optional): a dictionary specifying parameters for pie chart creation.

Keys are tuples of (column_name, aggregation_function), and values are strings representing chart names.

Format: {(column_name, aggregation_function): 'Chart Name'}

Default: {('quantity', 'sum'): 'Quantity Share',

('revenue', 'sum'): 'Revenue Share',

('invoice_no', 'count'): 'Entries Share'}

- show_pie_charts_notes (bool, optional): whether to display predefined notes for certain pie charts. By default - True.

Notes are available for: 'Quantity Share', 'Revenue Share', Entries Share', 'Invoices Coverage', 'Stock Codes Coverage',

'Descriptions Coverage', 'Products Coverage' and 'Customers Coverage'.

These notes explain the difference between count-based metrics and coverage-based metrics.

..........

- show_boxplots (bool, optional): whether to display boxplots for quantity and revenue distribution. By default, False.

Note: `show_qty_rev` must be True to display this.

- boxplots_parameter (str, optional): an additional categorical variable for the boxplot if needed.

If yes, the column of `df` must be specified. By default - None.

- show_outliers (bool, optional): whether to display outliers in boxplots. True shows them; False hides them. By default - True.

..........

- show_period (bool, optional): whether to display invoice period coverage. By default - False.

Note: both datasets must contain `invoice_day` and `invoice_month` columns to display this.

..........

- show_example (bool, optional): whether to display examples of the data slice. By default - False.

- example_type (str, optional): type of examples to display ('sample', 'head', 'tail'). By default - 'sample'.

- random_state (int, optional): controls the randomness of sample selection. Default - None.

If provided, ensures consistent results across multiple runs. Default - None.

- example_limit (int, optional): maximum number of examples to display. By default - 5.

..........

- frame_len (int, optional): length of the frame for printed outputs. Default - table_width. If `show_pie_charts` or `show_boxplots` is True, `frame_len` is set to `table_width` (which is defined at the project initiation stage). Else if `show_example` is True, takes the minimum value of `table_width` and manually set `frame_len`.

"""

# adjusting output frame width

if show_pie_charts or show_boxplots:

frame_len = table_width

elif show_example:

frame_len = min(table_width, frame_len)

elif show_period:

frame_len = min(110, frame_len)

# getting DataFrame names

df_name = get_df_name(df) if get_df_name(df) != "name not found" else "the data slice mentioned in the call function"

initial_df_name = get_df_name(initial_df) if get_df_name(initial_df) != "name not found" else "the initial DataFrame"

# calculating basic statistics

share_entries = round(len(df) / len(initial_df) * 100, 1)

# adjusting title extension if needed

title_extension = f' {title_extension}' if title_extension else ''

# printing header

print('='*frame_len)

display(Markdown(f'**Evaluation of share: `{df_name}`{title_extension} in `{initial_df_name}`**\n'))

print('-'*frame_len)

print(f'\033[1mNumber of entries\033[0m: {len(df)} ({share_entries:.1f}% of all entries)\n')

# handling quantity and revenue analysis

if show_qty_rev and ('revenue' not in df.columns or 'quantity' not in initial_df.columns):

print(f'\n\033[1;31mNote\033[0m: For displaying the data on revenues, all datasets must contain the "revenue" column.\n\n'

f'To avoid this message, set: "show_qty_rev=False".')

return

# handling pie-charts and boxplots

if show_qty_rev:

_display_quantity_revenue(df, initial_df)

if show_pie_charts and pie_chart_parameters:

_create_pie_charts(df, initial_df, df_name, initial_df_name,

pie_chart_parameters, show_pie_charts_notes, title_extension, frame_len)

if show_boxplots:

_create_boxplots(df, df_name, boxplots_parameter, show_outliers, title_extension, frame_len)

# handling period coverage

if show_period:

_display_period_coverage(df, initial_df, frame_len)

# handling examples

if show_example:

_display_examples(df, example_type, example_limit, random_state, frame_len)

print('='*frame_len)

def _display_quantity_revenue(df, initial_df):

"""Helper function to display quantity and revenue statistics."""

quantity = df['quantity'].sum()

total_quantity = initial_df['quantity'].sum()

quantity_share = abs(quantity / total_quantity) * 100

revenue = round(df['revenue'].sum(), 1)

total_revenue = initial_df['revenue'].sum()

revenue_share = abs(revenue / total_revenue) * 100

print(f'\033[1mQuantity\033[0m: {quantity} ({quantity_share:.1f}% of the total quantity)')

print(f'\033[1mRevenue\033[0m: {revenue} ({revenue_share:.1f}% of the total revenue)')

def _create_pie_charts(df, initial_df, df_name, initial_df_name, pie_chart_parameters, show_pie_charts_notes, title_extension, frame_len):

"""Helper function to create and display pie charts."""

print('-'*frame_len)

# extracting metrics and names from parameters

metrics_order = []

pie_chart_names = []

agg_dict = {}

for (column, operation), chart_name in pie_chart_parameters.items():

if column not in agg_dict:

agg_dict[column] = []

agg_dict[column].append(operation)

metrics_order.append(f'{column}_{operation}')

pie_chart_names.append(chart_name)

total_metrics = initial_df.agg(agg_dict).abs()

slice_metrics = df.agg(agg_dict).abs()

# flattening metrics while preserving order

total_metrics_flat = []

slice_metrics_flat = []

for column in agg_dict:

for operation in agg_dict[column]:

total_metrics_flat.append(total_metrics[column][operation])

slice_metrics_flat.append(slice_metrics[column][operation])

# checking values and creating pie charts

values_check = True

for metric_name, slice_val, total_val in zip(metrics_order, slice_metrics_flat, total_metrics_flat):

if slice_val > total_val:

print(f'\033[1;31mNote\033[0m: Unable to create pie chart as "{metric_name}" in the "{df_name}" ({slice_val:.0f}) exceeds the total "{metric_name}" ({total_val:.0f}) in the "{initial_df_name}".')

values_check = False

if values_check:

percentages = [100 * slice_metric/total_metric for slice_metric, total_metric in zip(slice_metrics_flat, total_metrics_flat)]

other_percentages = [100 - percent for percent in percentages]

pie_charts_data = {name: [percent, 100-percent]

for name, percent in zip(pie_chart_names, percentages)}

# plotting pie charts

num_charts = len(pie_charts_data)

rows = (num_charts + 1) // 2

fig, axs = plt.subplots(rows, 2, figsize=(8, 4*rows))

axs = axs.flatten() if isinstance(axs, np.ndarray) else [axs]

pie_chart_name = f'Pie-charts' if len(pie_chart_names) > 1 else f'Pie-chart'

fig.suptitle(f'The {pie_chart_name} of "{df_name}"{title_extension} vs Other Data in "{initial_df_name}"', fontsize=13, fontweight='bold', y=1)

colors = sns.color_palette('pastel')

for i, (metric, values) in enumerate(pie_charts_data.items()):

ax = axs[i]

wrapped_names = [wrap_text(name, 25) for name in [df_name, 'Other Data']] # wrapping pie charts labels, if needed

ax.pie(values, labels=wrapped_names, autopct='%1.1f%%', startangle=90, colors=colors)

ax.set_title(f'{metric}', fontsize=12, y=1.02, fontweight='bold')

# removing unused subplots

for i in range(num_charts, len(axs)):

fig.delaxes(axs[i])

plt.tight_layout()

plt.show();

# displaying predefined notes for pie charts if needed

if show_pie_charts_notes and pie_chart_parameters:

notes_to_display = display_pie_charts_notes(pie_chart_parameters.values(), df_name, initial_df_name)

notes_to_display_content = ''

for note in notes_to_display.values():

notes_to_display_content += note + '\n'

# creating collapsible section with notes

notes_html = f'''

<details>

<summary style="color: navy; cursor: pointer;"><b><i>Click to view pie chart explanations</i></b></summary>

<p>

<ul>

{notes_to_display_content}

</ul>

</p>

</details>

'''

display(HTML(notes_html))

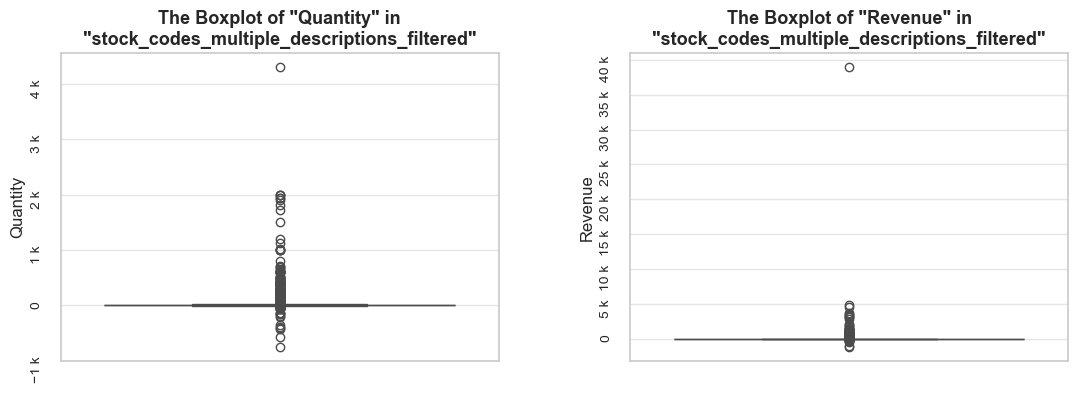

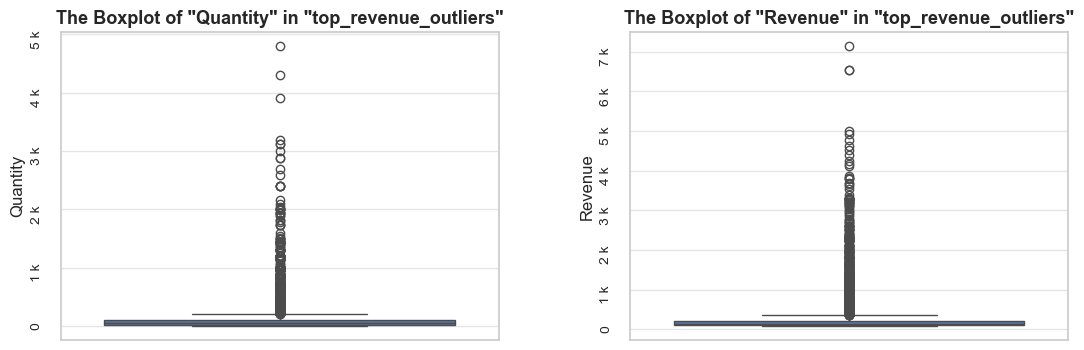

def _create_boxplots(df, df_name, boxplots_parameter, show_outliers, title_extension, frame_len):

"""Helper function to create and display boxplots."""

print('-'*frame_len)

palette=None

if boxplots_parameter:

palette='pastel'

if boxplots_parameter not in df.columns:

print(f'\033[1;31mNote\033[0m: boxplots_parameter "{boxplots_parameter}" is not applied, as it must be a column of "{df_name}" DataFrame.\n'

f'To avoid this message, input a relevant column name or set: "boxplots_parameter=None".')

boxplots_parameter, palette = None, None # avoiding error in the next step when building boxplots

else:

boxplots_parameter_limit = 10 # maximum number of boxes displayed within one graph

boxplots_parameter_number = df[boxplots_parameter].nunique() # the number of unique values of boxplots_parameter

if boxplots_parameter_number > boxplots_parameter_limit:

print(f'\033[1;31mNote\033[0m: `boxplots_parameter` "{boxplots_parameter}" is not applied, as the number of its unique values exceeds the threshold of {boxplots_parameter_limit}.\n'

f'To avoid this message, input another data slice or another `boxplots_parameter` with values number under the threshold level, or set: "boxplots_parameter=None."')

boxplots_parameter, palette = None, None # avoiding error in the next step when building boxplots

fig, axes = plt.subplots(1, 2, figsize=(13, 4))

for i, metric in enumerate(['quantity', 'revenue']):

sns.boxplot(data=df, x=boxplots_parameter, hue=boxplots_parameter, y=metric,

showfliers=show_outliers, ax=axes[i], palette=palette)

# removing legend if it exists

legend = axes[i].get_legend()

if legend is not None:

legend.remove()

title = f'The Boxplot of "{metric.title()}" in "{df_name}"{title_extension}'

#wrapped_title = '\n'.join(textwrap.wrap(title, width=55))

wrapped_title = wrap_text(title, 55)

# REMOVE THIS LINE: axes[i].get_legend().remove()

axes[i].set_title(wrapped_title, fontsize=13, fontweight ='bold')

axes[i].set_xlabel(boxplots_parameter, fontsize=12)

axes[i].set_ylabel(metric.title(), fontsize=12)

axes[i].tick_params(labelsize=10, rotation=90)

axes[i].yaxis.set_major_formatter(EngFormatter())

plt.subplots_adjust(wspace=0.3)

plt.show();

def _display_period_coverage(df, initial_df, frame_len):

"""Helper function to display period coverage information."""

print('-'*frame_len)

required_columns = {'invoice_day', 'invoice_month'}

if not (required_columns.issubset(df.columns) and required_columns.issubset(initial_df.columns)):

print(f'\n\033[1;31mNote\033[0m: For displaying the invoice period coverage, all datasets must contain '

f'the "invoice_day" and "invoice_month" columns.\n'

f'To avoid this message, set: "show_period=False".')

return

first_invoice_day = df['invoice_day'].min()

if pd.isnull(first_invoice_day):

print('\033[1mInvoice period coverage:\033[0m does not exist')

return

# calculating periods

last_invoice_day = df['invoice_day'].max()

invoice_period = 1 if first_invoice_day == last_invoice_day else (last_invoice_day - first_invoice_day).days

total_period = (initial_df['invoice_day'].max() - initial_df['invoice_day'].min()).days

period_share = invoice_period / total_period * 100

invoice_months_count = math.ceil(df['invoice_month'].nunique())

total_period_months_count = math.ceil(initial_df['invoice_month'].nunique())

print(f'\033[1mInvoice period coverage:\033[0m {first_invoice_day} - {last_invoice_day} '

f'({period_share:.1f}%; {invoice_period} out of {total_period} total days; '

f'{invoice_months_count} out of {total_period_months_count} total months)')

def _display_examples(df, example_type, example_limit, random_state, frame_len):

"""Helper function to display examples from the dataset."""

print('-'*frame_len)

example_methods = {

'sample': lambda df: df.sample(n=min(example_limit, len(df)), random_state=random_state),

'head': lambda df: df.head(min(example_limit, len(df))),

'tail': lambda df: df.tail(min(example_limit, len(df)))}

example_messages = {

'sample': 'Random examples',

'head': 'Top rows',

'tail': 'Bottom rows'}

message = example_messages.get(example_type)

method = example_methods.get(example_type)

print(f'\033[1m{message}:\033[0m\n')

print(method(df))

def display_pie_charts_notes(pie_chart_names, df_name, initial_df_name):

"""Helper function to display notes for pie charts."""

specific_notes = {

'Quantity Share': (f'The <strong>"Quantity Share"</strong> pie chart represents the proportion of total item quantities, '

f'showing what percentage of all quantities in <code>{initial_df_name}</code> falls into <code>{df_name}</code>.'),

'Revenue Share': (f'The <strong>"Revenue Share"</strong> pie chart represents the proportion of total revenue, '

f'showing what percentage of all revenue in <code>{initial_df_name}</code> is generated in <code>{df_name}</code>.'),

'Entries Share': (f'The <strong>"Entries Share"</strong> pie chart represents the share of total entries (purchases), '

f'showing what percentage of all individual product purchases in <code>{initial_df_name}</code> occurs in <code>{df_name}</code>. '

f'Every entry is counted separately, even if they are associated with the same order.'),

'Invoices Coverage': (f'The <strong>"Invoices Coverage"</strong> pie chart shows the coverage of distinct invoices (orders). '

f'This metric may show a larger share than count-based metrics because it represents order range coverage '

f'rather than purchases volume. For example, if an order appears in 100 entries in total but only 1 entry '

f'falls into <code>{df_name}</code>, it still counts as one full unique order in this chart.'),

'Stock Codes Coverage': (f'The <strong>"Stock Codes Coverage"</strong> pie chart shows the coverage of distinct stock codes. '

f'This metric may show a larger share than count-based metrics because it represents stock code range coverage '

f'rather than purchases volume. For example, if a stock code appears in 100 entries in total but only 1 entry '

f'falls into <code>{df_name}</code>, it still counts as one full unique stock code in this chart.'),

'Descriptions Coverage': (f'The <strong>"Descriptions Coverage"</strong> pie chart shows the coverage of distinct product descriptions. '

f'This metric may show a larger share than count-based metrics because it represents description range coverage '

f'rather than purchases volume. For example, if a description appears in 100 entries in total but only 1 entry '

f'falls into <code>{df_name}</code>, it still counts as one full unique description in this chart.'),

'Products Coverage': (f'The <strong>"Products Coverage"</strong> pie chart shows the coverage of distinct products. '

f'This metric may show a larger share than count-based metrics because it represents product range coverage '

f'rather than purchases volume. For example, if a product appears in 100 entries in total but only 1 entry '

f'falls into <code>{df_name}</code>, it still counts as one full unique product in this chart.'),

'Customers Coverage': (f'The <strong>"Customers Coverage"</strong> pie chart shows the coverage of distinct customer IDs. '

f'This metric may show a larger share than count-based metrics because it represents customer reach '

f'rather than purchases volume. For example, if a customer made 50 purchases but only 1 purchase falls into '

f'<code>{df_name}</code>, they still count as one full unique customer in this chart.')}

# getting only the notes for charts that were actually displayed

notes_to_display = {}

for name in pie_chart_names:

if name in specific_notes:

notes_to_display[name] = f'<li><i>{specific_notes[name]}</i></li>' # creating dynamic formatted HTML list of notes

return notes_to_displayFunction: wrap_text

The function wraps text into multiple lines, ensuring each line is within the specified width, while leaving shorter text unchanged. It distinguishes between text in “snake_case” format and ordinary text with words separated by spaces, treating each format appropriately.

def wrap_text(text, max_width=25):

"""

Wraps a given text into multiple lines ensuring that each line doesn't exceed `max_width`.

If the text follows "snake_case" format it is wrapped at underscores.

Otherwise it is wrapped at spaces between words (useful e.g. for notes that must be limited in string length)

Input:

- text (str): a text to be wrapped.

- max_width (int): maximum line width. Default - 25.

Output:

- The wrapped text (str)

"""

# handling text that in "snake_case" format (e.g. labels for charts)

if _is_snake_case(text):

if len(text) <= max_width:

return text

parts = text.split('_')

wrapped = []

current_line = ''

for part in parts:

if len(current_line) + len(part) <= max_width:

current_line = f'{current_line}_{part}' if current_line else part

else:

wrapped.append(current_line)

current_line = f'_{part}'

if current_line: # appending the last line

wrapped.append(current_line)

return '\n'.join(wrapped)

# handling text separated by spaces (e.g. for notes that must be limited in string length)

else:

return '\n'.join(textwrap.wrap(text, width=max_width))

def _is_snake_case(text):

pattern = r'^[a-z0-9]+(_[a-z0-9]+)*$'

return bool(re.match(pattern, text))# checking `InvoiceNo` column - whether it contains only integers

try:

df_ecom['InvoiceNo'] = df_ecom['InvoiceNo'].astype(int)

contains_only_integers = True

except ValueError:

contains_only_integers = False

print(f'\033[1mThe `InvoiceNo` column contains integers only:\033[0m {contains_only_integers}')The `InvoiceNo` column contains integers only: False

Observations and Decisions

InvoiceNo and CustomerID columns contain not only integers, so we will leave their original data types as they are by now.CustomerID data type from float to string after addressing the missing values in this column.InvoiceDate column only.Implementation of Decisions

df_ecom['InvoiceDate'] = pd.to_datetime(df_ecom['InvoiceDate'])# converting camelCase to snake_case format (which in my opinion looks more lucid)

def camel_to_snake(name):

c_to_s = re.sub('([a-z0-9])([A-Z])', r'\1_\2', name)

return c_to_s.lower()

df_ecom.columns = [camel_to_snake(column) for column in df_ecom.columns]

df_ecom.columnsIndex(['invoice_no', 'stock_code', 'description', 'quantity', 'invoice_date', 'unit_price', 'customer_id'], dtype='object')# investigating negative values in `quantity` column

negative_qty_df = df_ecom[df_ecom['quantity'] < 0].copy()

share_evaluation(negative_qty_df, initial_df=df_ecom, show_qty_rev=False, show_boxplots=True, show_period=False,

show_example=True, example_type='sample', example_limit=3)======================================================================================================================================================Evaluation of share: negative_qty_df in df_ecom

------------------------------------------------------------------------------------------------------------------------------------------------------ Number of entries: 10624 (2.0% of all entries) ------------------------------------------------------------------------------------------------------------------------------------------------------ Random examples: invoice_no stock_code description quantity invoice_date unit_price customer_id 109345 545599 22272 NaN -140 2019-03-02 11:17:00 0.00 NaN 80662 C543050 22962 JAM JAR WITH PINK LID -2 2019-02-01 10:14:00 0.85 12625.00 205953 554852 21272 ? -212 2019-05-25 09:24:00 0.00 NaN ======================================================================================================================================================

# investigating negative values in `UnitPrice` column

negative_unit_price_df = df_ecom[df_ecom['unit_price'] < 0]

share_evaluation(negative_unit_price_df, initial_df=df_ecom, show_qty_rev=False, show_period=False,

show_example=True, example_type='sample', example_limit=3)======================================================================================================================================================Evaluation of share: negative_unit_price_df in df_ecom

------------------------------------------------------------------------------------------------------------------------------------------------------ Number of entries: 2 (0.0% of all entries) ------------------------------------------------------------------------------------------------------------------------------------------------------ Random examples: invoice_no stock_code description quantity invoice_date unit_price customer_id 299983 A563186 B Adjust bad debt 1 2019-08-10 14:51:00 -11062.06 NaN 299984 A563187 B Adjust bad debt 1 2019-08-10 14:52:00 -11062.06 NaN ======================================================================================================================================================

Observations and Decisions

Implementation of Decisions

# getting rid of negative unit prices

df_ecom = data_reduction(df_ecom, lambda df: df.query('unit_price >= 0'))Number of entries cleaned out from the "df_ecom": 2 (0.0%)

# investigating missing values in the `customer_id` column

missing_customer_id = df_ecom[df_ecom['customer_id'].isna()]

share_evaluation(missing_customer_id, initial_df=df_ecom, show_qty_rev=False, show_period=False,

show_example=True, example_type='sample', example_limit=5)======================================================================================================================================================Evaluation of share: missing_customer_id in df_ecom

------------------------------------------------------------------------------------------------------------------------------------------------------ Number of entries: 135078 (24.9% of all entries) ------------------------------------------------------------------------------------------------------------------------------------------------------ Random examples: invoice_no stock_code description quantity invoice_date unit_price customer_id 2658 536592 22142 CHRISTMAS CRAFT WHITE FAIRY 1 2018-11-29 17:06:00 3.36 NaN 394107 570871 21670 BLUE SPOT CERAMIC DRAWER KNOB 1 2019-10-10 16:36:00 3.29 NaN 62392 541497 22248 DECORATION PINK CHICK MAGIC GARDEN 5 2019-01-16 15:19:00 0.79 NaN 247932 558777 22211 WOOD STAMP SET FLOWERS 2 2019-07-02 10:23:00 0.83 NaN 124344 546974 22141 CHRISTMAS CRAFT TREE TOP ANGEL 1 2019-03-16 12:08:00 4.13 NaN ======================================================================================================================================================

# investigating missing values in the `description` column

missing_descriptions = df_ecom[df_ecom['description'].isna()]

share_evaluation(missing_descriptions, initial_df=df_ecom, show_qty_rev=False, show_period=False,

show_example=True, example_type='sample', random_state=7, example_limit=5)

missing_descriptions_qty = missing_descriptions['quantity'].sum()

missing_descriptions_qty_share = abs( missing_descriptions_qty/ df_ecom['quantity'].sum())

print(f'\033[1mQuantity in the entries with missing descriptions:\033[0m {missing_descriptions_qty} ({missing_descriptions_qty_share *100 :0.1f}% of the total quantity).\n')======================================================================================================================================================Evaluation of share: missing_descriptions in df_ecom

------------------------------------------------------------------------------------------------------------------------------------------------------ Number of entries: 1454 (0.3% of all entries) ------------------------------------------------------------------------------------------------------------------------------------------------------ Random examples: invoice_no stock_code description quantity invoice_date unit_price customer_id 74287 542417 84966B NaN -11 2019-01-25 17:38:00 0.00 NaN 250532 559037 82583 NaN 10 2019-07-03 15:29:00 0.00 NaN 171180 551394 16015 NaN 400 2019-04-26 12:37:00 0.00 NaN 468448 576473 21868 NaN -108 2019-11-13 11:40:00 0.00 NaN 201752 554316 21195 NaN -1 2019-05-21 15:29:00 0.00 NaN ====================================================================================================================================================== Quantity in the entries with missing descriptions: -13609 (0.3% of the total quantity).

Observations

customer_id column consists of ~25% missing values; this might reflect guest checkouts or unregistered users.description column has 0.3% missing values, which account for 0.3% of the total quantity. According to sample entries, these missing values might be associated with data corrections, as the unit price is zero and many entries have a negative quantity.Decisions

customer_id is not crucial for our study, and considering that a substantial portion of the data (~1/4) is affected by missing values in this column, we won’t discard these records. Instead, we will convert the missing values incustomer_id column to zeros to ensure proper data processing. As decided above we will convert the float data type to string.Implementation of Decisions

# converting the missing values to zeros in the `customer_id` column

df_ecom = df_ecom.copy() # avoiding SettingWithCopyWarning

df_ecom['customer_id'] = df_ecom['customer_id'].fillna(0)# converting the `customer_id` column to string type (first we convert the float to an integer, dropping any decimal places in naming).

df_ecom['customer_id'] = df_ecom['customer_id'].astype(int).astype(str) # discarding records with missing descriptions

df_ecom = data_reduction(df_ecom, lambda df: df.dropna(subset=['description']))Number of entries cleaned out from the "df_ecom": 1454 (0.3%)

As expected, after converting the missing values to zeros in the customer_id column, the float type was successfully converted to integer.

# checking duplicates

duplicates = df_ecom[df_ecom.duplicated()]

share_evaluation(duplicates, initial_df=df_ecom, show_qty_rev=False, show_period=False,

show_example=True, example_type='head', example_limit=5)======================================================================================================================================================Evaluation of share: duplicates in df_ecom

------------------------------------------------------------------------------------------------------------------------------------------------------ Number of entries: 5268 (1.0% of all entries) ------------------------------------------------------------------------------------------------------------------------------------------------------ Top rows: invoice_no stock_code description quantity invoice_date unit_price customer_id 517 536409 21866 UNION JACK FLAG LUGGAGE TAG 1 2018-11-29 11:45:00 1.25 17908 527 536409 22866 HAND WARMER SCOTTY DOG DESIGN 1 2018-11-29 11:45:00 2.10 17908 537 536409 22900 SET 2 TEA TOWELS I LOVE LONDON 1 2018-11-29 11:45:00 2.95 17908 539 536409 22111 SCOTTIE DOG HOT WATER BOTTLE 1 2018-11-29 11:45:00 4.95 17908 555 536412 22327 ROUND SNACK BOXES SET OF 4 SKULLS 1 2018-11-29 11:49:00 2.95 17920 ======================================================================================================================================================

# getting rid of duplicates

df_ecom = data_reduction(df_ecom, lambda df: df.drop_duplicates())Number of entries cleaned out from the "df_ecom": 5268 (1.0%)

# adding extra period-related columns

df_ecom['invoice_year'] = df_ecom['invoice_date'].dt.year

df_ecom['invoice_month'] = df_ecom['invoice_date'].dt.month

df_ecom['invoice_year_month'] = df_ecom['invoice_date'].dt.strftime('%Y-%m')

df_ecom['invoice_week'] = df_ecom['invoice_date'].dt.isocalendar().week

df_ecom['invoice_year_week'] = df_ecom['invoice_date'].dt.strftime('%G-Week-%V')

df_ecom['invoice_day'] = df_ecom['invoice_date'].dt.date

df_ecom['invoice_day_of_week'] = df_ecom['invoice_date'].dt.weekday

df_ecom['invoice_day_name'] = df_ecom['invoice_date'].dt.day_name()

df_ecom['revenue'] = df_ecom['unit_price'] * df_ecom['quantity']

# checking the result

df_ecom.sample(3)| invoice_no | stock_code | description | quantity | invoice_date | unit_price | customer_id | invoice_year | invoice_month | invoice_year_month | invoice_week | invoice_year_week | invoice_day | invoice_day_of_week | invoice_day_name | revenue | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 253212 | 559162 | 22470 | HEART OF WICKER LARGE | 1 | 2019-07-04 16:29:00 | 5.79 | 0 | 2019 | 7 | 2019-07 | 27 | 2019-Week-27 | 2019-07-04 | 3 | Thursday | 5.79 |

| 216795 | 555854 | 21192 | WHITE BELL HONEYCOMB PAPER | 1 | 2019-06-05 13:47:00 | 1.65 | 12748 | 2019 | 6 | 2019-06 | 23 | 2019-Week-23 | 2019-06-05 | 2 | Wednesday | 1.65 |

| 272645 | 560773 | 22135 | MINI LADLE LOVE HEART PINK | 3 | 2019-07-18 16:17:00 | 0.83 | 0 | 2019 | 7 | 2019-07 | 29 | 2019-Week-29 | 2019-07-18 | 3 | Thursday | 2.49 |

We set two primary objectives for the EDA part of the project:

Let’s note here, that the focused Product Range Analysis will be conducted in the next phase, utilizing the data cleaned at this EDA stage.

Given the complexity of our study, we will arrange the plan for each component of EDA, describing parameters and study methods.

Parameters to study

Distribution analysis

Top performers analysis

Methods of study

distribution_IQR function will be handy for this purpose.share_evaluation function for this purpose.plot_totals_distribution function for this purpose.⚠ Note: despite some parts of our distribution analysis (like mutually exclusive entries or high-volume customers) go beyond common distribution analysis, keeping them here is reasonable as they provide early insights meaningful for later stages.

invoice_no)

stock_code) and Item Name (description)

invoice_no and stock_code to detect operational or non-product entries. We will filter those, containing letters (during initial data inspection we detected that invoice_no and stock_code columns contain not only integers).⚠ Note: *The identifiers analysis may be integrated into the distribution analysis**, if we find that deeper investigation of identifiers is necessary at that stage.*

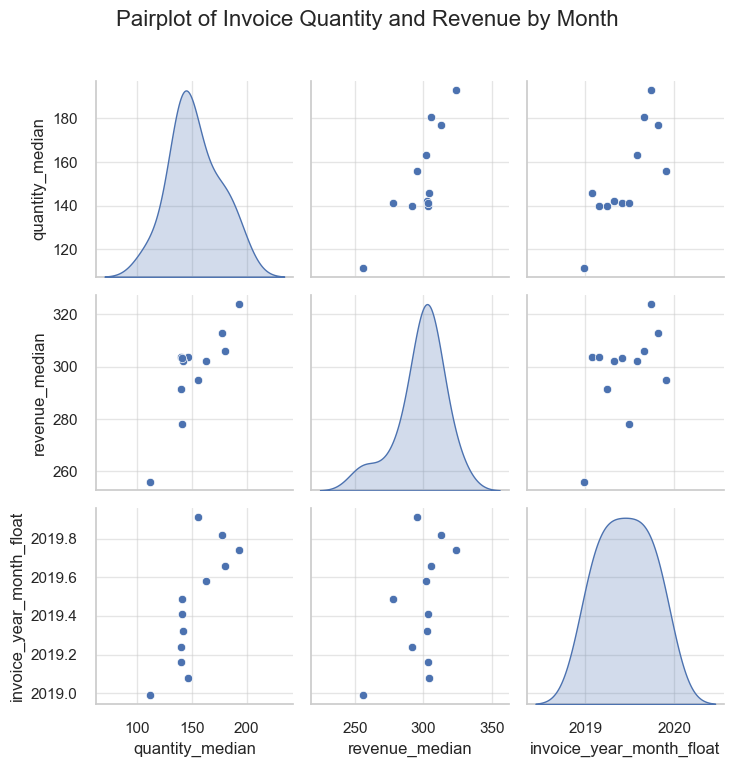

Parameters’ totals and typical unit price by month

Invoice parameters by month

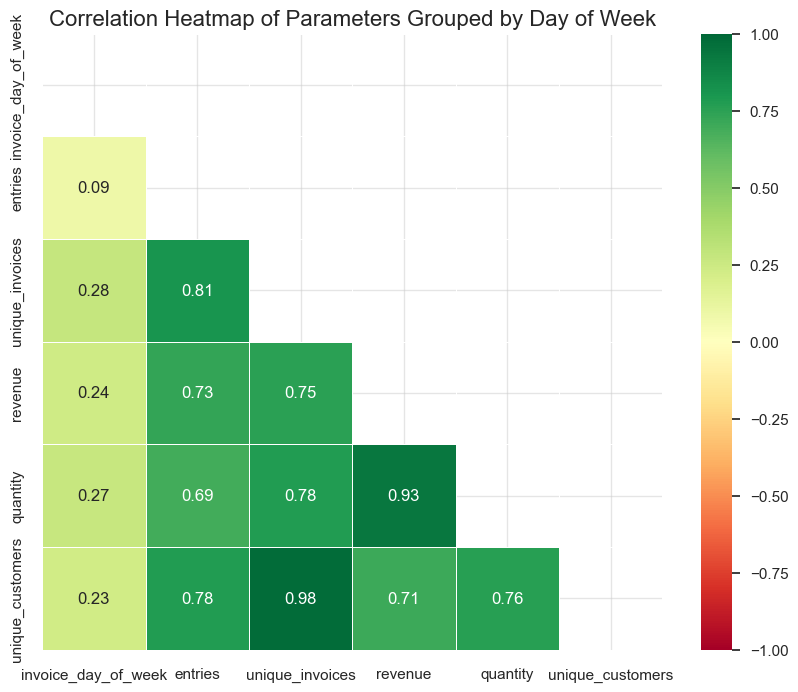

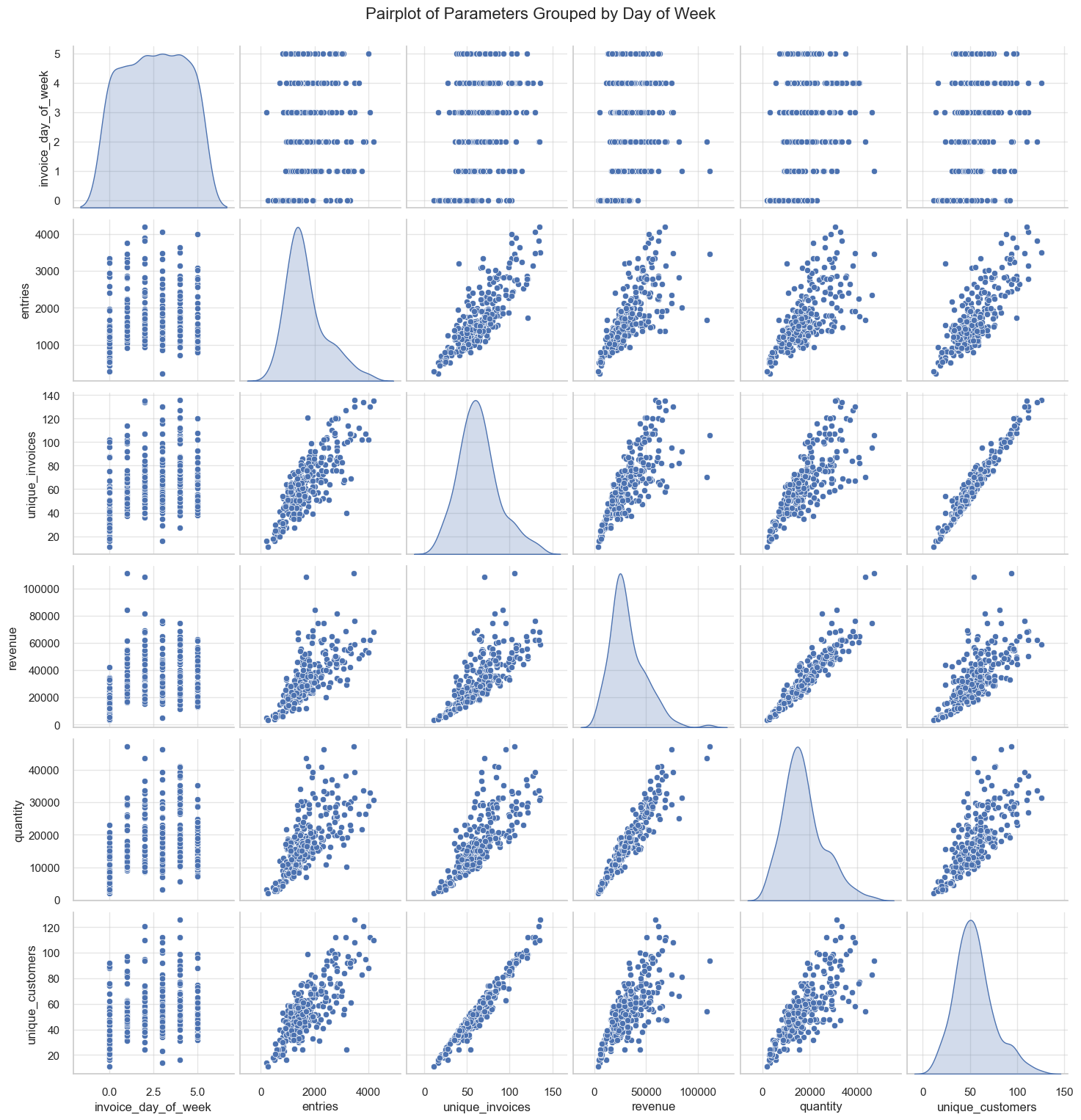

Parameters by day of the week

Distribution of invoices by week

Parameters change dynamics by month

boxplots function for this purpose.plot_totals_distribution function for this purpose.While the core of our project is focused on Product Range Analysis, studying additional parameters such as unique customers by month or the correlation between average invoice revenue and day of the week is not central to our primary goal. However, these extra analyses are not highly time-consuming and may reveal valuable insights that contribute to a more comprehensive understanding of sales patterns.

When making decisions about removing irrelevant data, we will ask ourselves several questions:

To conclude:

Since we need to study several parameters with similar approach, it’s reasonable to create a universal but adjustable set of tools for this purpose. The main tool will be a function called distribution_IQR. It will take our study parameters as input and provide graphs and calculations for data visualization and “cleaning” purposes (see the function description below for details).

For defining the limits of outliers in this function we will use “1.5*IQR approach” (whiskers of the boxplot).

But we won’t do it blindly, for instance we will use the “percentile approach” as well when reasonable (since not all parameters can be treated same way). A percentile_outliers function is built for this purpose.

An additional get_sample_size function will serve us for quicker plotting of large datasets, where full resolution is not necessary.

The plot_totals_distribution function is designed for quick calculation and visualization of either or both distributions and totals for selected parameters, allowing for the display of random, best, or worst performers.

Thanks to previous projects, two of these functions are already in the workpiece, the only thing that currently remains is minor adjustments.

Function: get_sample_size

def get_sample_size(df, target_size=10000, min_sample_size=0.01, max_sample_size=1):

"""

The function calculates optimal fracion of data to reduce DataFrame size.

It would be applied for quicker plotting of large datasets, where full resolution is not needed.

As input this function takes:

- df (DataFrame): the DataFrame to be reduced if needed.

- target_size (int): desired sample size (default - 10000)

- min_sample_size (float): minimum sampling fraction (default - 0.01, which means 1% of the df)*

- max_sample_size (float): maximum sampling fraction (default - 1, which means 100% of the df)

Output:

- float: sampling fraction between min and max, or 1 if df is smaller than target_size

----------------

Note: A target_size in thousands typically provides a sufficient representation of the overall data distribution for most plotting purposes.

However, accuracy may vary based on data complexity. A higher target_size results in slower graph plotting, but more reliable outcomes.

----------------

"""

current_size = len(df)

if current_size <= target_size:

return 1 # no sampling needed

sample_size = target_size / current_size

return max(min(sample_size, max_sample_size), min_sample_size)Function: distribution_IQR

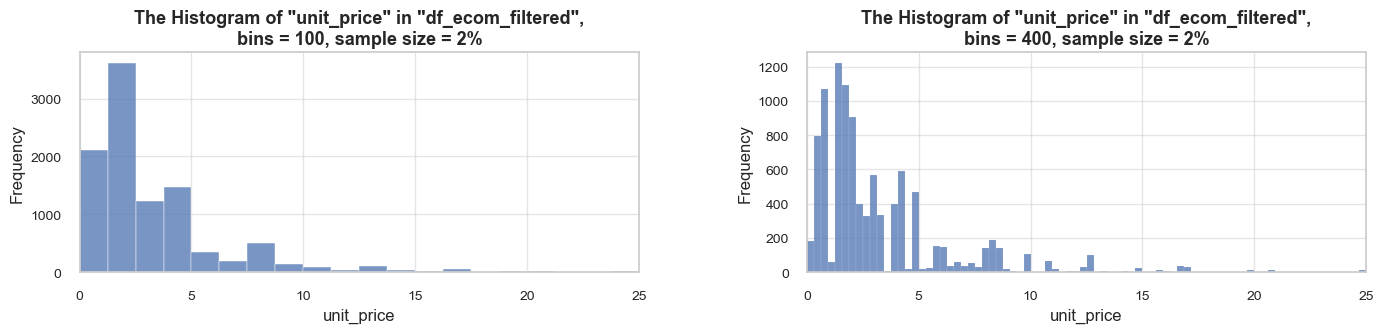

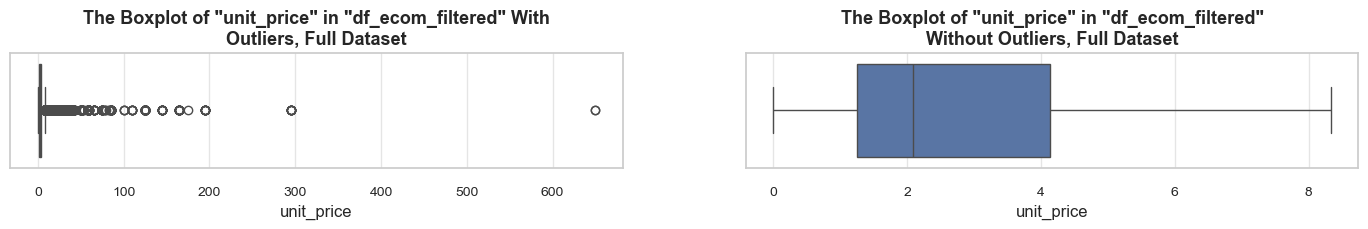

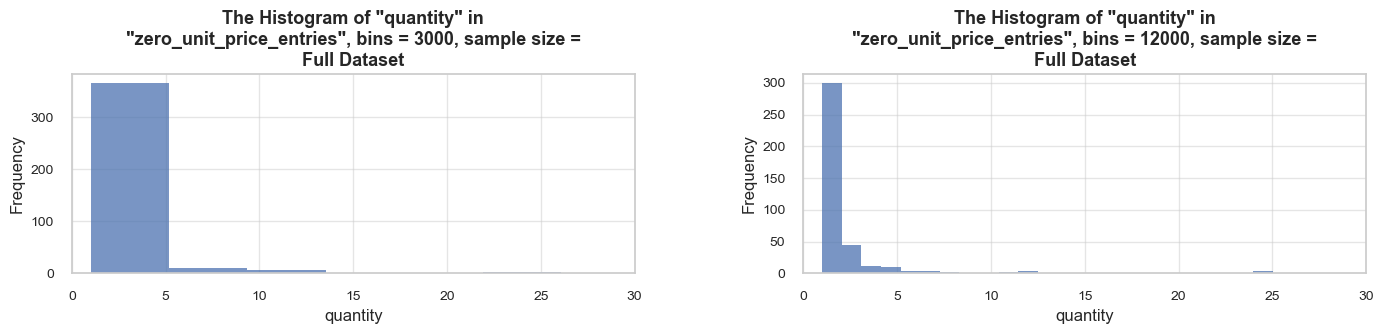

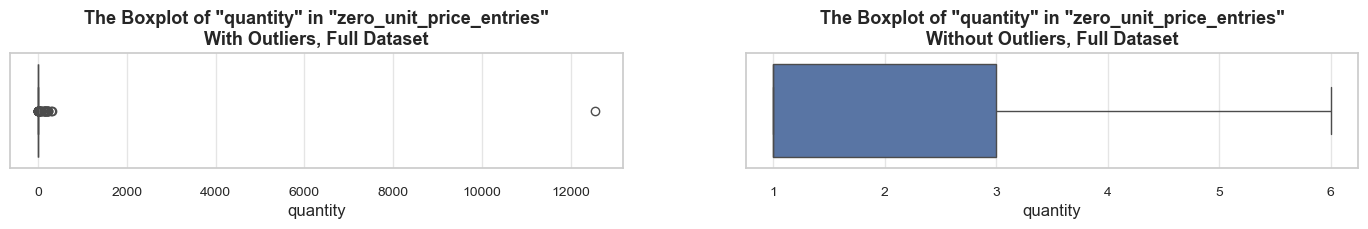

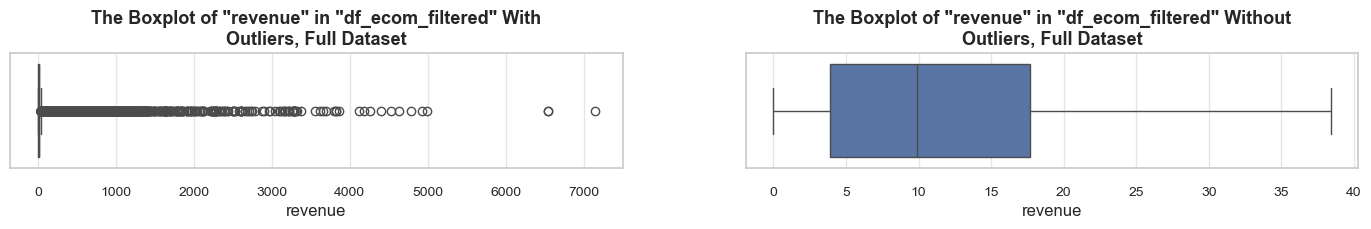

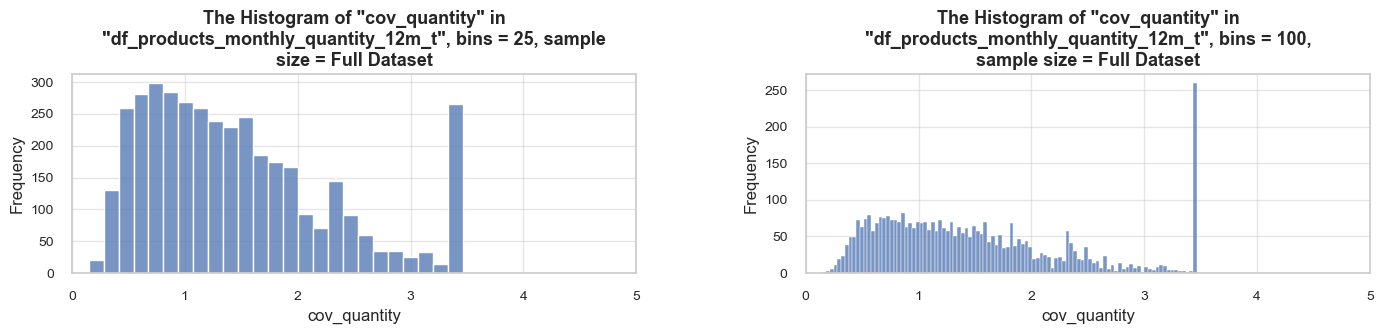

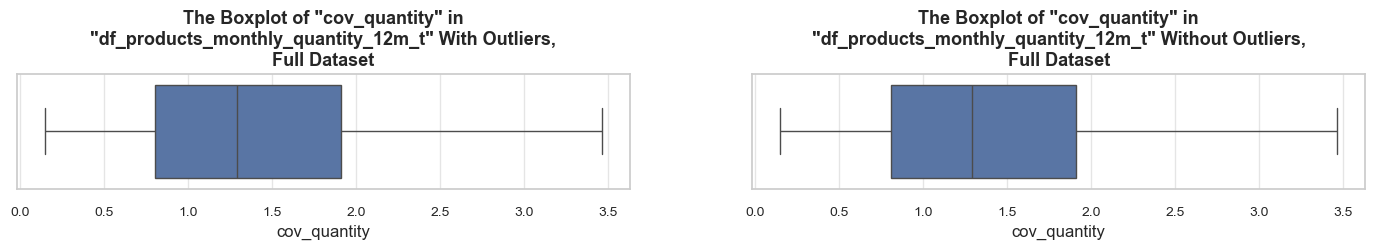

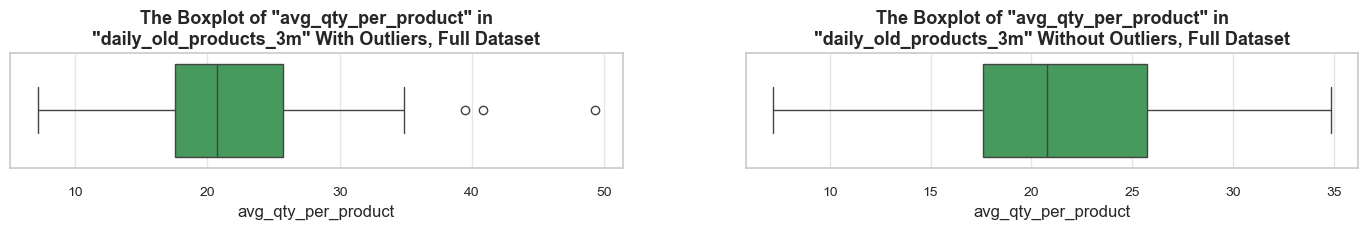

def distribution_IQR(df, parameter, x_limits=None, title_extension='', bins=[50, 100], outliers_info=True, speed_up_plotting=True, target_sample=10000, frame_len=50):

"""

The function analyzes the distribution of a specified DataFrame column using discriptive statistics, histograms and boxplots.

As input this function takes:

- df: the DataFrame containing the data to be analyzed.

- parameter (str): the column of the DataFrame to be analyzed.

- x_limits (list of float, optional): the x-axis limits for the histogram. If None, limits are set automatically. Default is None.

- title_extension (str, optional): additional text to append to the summary and plot titles. Default - empty string.

- bins (list of int, optional): list of bin numbers for histograms. Default - [50, 100].

- outliers_info (bool, optional): whether to display summary statistics and information on outliers. Default - True.

- speed_up_plotting (bool, optional): whether to speed up plotting by using a sample data slice of the DataFrame instead of the full DataFrame.

This option can significantly reduce plotting time for large datasets (tens of thousands of rows or more) when full resolution is not necessary.

Note that using a sample may slightly reduce the accuracy of the visualization, but is often sufficient for exploratory analysis. Default - True.

- target_sample (int, optional): the desired sample size when 'speed_up_plotting' is True. This parameter is passed to the get_sample_size function

to determine the appropriate sampling fraction. A larger 'target_sample' will result in a more accuracy of the visualization but slower plotting.

Default - 10000.

- frame_len (int, optional): the length of frame of printed outputs. Default - 50.

As output the function presents:

- Displays several histograms with set bin numbers.

- Displays two boxplots: the first with outliers included, and the second with outliers excluded.

- Provides main descriptive statistics for the specified parameter.

- Provides the upper and lower limits of outliers (if 'outliers_info' is set to True).

"""

# retrieving the name of the data slice

df_name = get_df_name(df) if get_df_name(df) != "name not found" else "the DataFrame"

# adjusting the title extension

if title_extension:

title_extension = f' {title_extension}'

# plotting histograms of the parameter distribution for each bin number

if speed_up_plotting:

frac = get_sample_size(df, target_size=target_sample)

if frac != 1:

df_sampled = df.sample(frac=frac, replace=False, random_state=7) # ensuring consistency across runs and preventing multiple sampling of the same row.

dataset_size = f'{frac*100:.0f}%'

print(f'\n\033[1mNote\033[0m: A sample data slice {dataset_size} of "{df_name}" was used for histogram plotting instead of the full DataFrame.\n'

f'This significantly reduced plotting time for the large dataset. '

f'The accuracy of the visualization might be slightly reduced, '

f'meanwhile it should be sufficient for exploratory analysis.\n')

else:

df_sampled = df

dataset_size = 'Full Dataset'

else:

dataset_size = 'Full Dataset'

df_sampled = df

if not isinstance(bins, list): # addressing the case of only one integer bins number (creating a list of 1 integer, for proper processing later in the code)

try:

bins = [int(bins)] # convert bins to int and create a list

except:

print("Bins is not a list or integer")

if len(bins) == 2:

fig, axes = plt.subplots(1, 2, figsize=(14, 3.5))

for i in [0, 1]:

sns.histplot(df_sampled[parameter], bins=bins[i], ax=axes[i])

title = f'The Histogram of "{parameter}" in "{df_name}"{title_extension}, bins = {bins[i]}, sample size = {dataset_size}'

wrapped_title = wrap_text(title, 55) # adjusting title width when it's necessary

axes[i].set_title(wrapped_title, fontsize=13, fontweight ='bold')

axes[i].set_xlabel(parameter, fontsize=12)

axes[i].set_ylabel('Frequency', fontsize=12)

axes[i].tick_params(labelsize=10)

# set manual xlim if it's provided

if x_limits is not None:

axes[i].set_xlim(x_limits)

plt.tight_layout()

plt.subplots_adjust(wspace=0.3, hspace=0.2)

plt.show()

else:

for i in bins:

plt.figure(figsize=(6, 3))

sns.histplot(df_sampled[parameter], bins=i)

title = f'The Histogram of "{parameter}" in "{df_name}"{title_extension}, bins={i}, sample size = {dataset_size}'

wrapped_title = wrap_text(title, 55) # adjusting title width when it's necessary

plt.title(wrapped_title, fontsize=13, fontweight ='bold')

plt.xlabel(parameter, fontsize=12)

plt.ylabel('Frequency', fontsize=12)

plt.tick_params(labelsize=10)

# set manual xlim if it's provided

if x_limits is not None:

plt.xlim(x_limits)

plt.show()

print('\n')

# plotting a boxplot of the parameter distribution

fig, axes = plt.subplots(1, 2, figsize=(17.4, 1.5))

for i in [0, 1]:

sns.boxplot(x=df[parameter], showfliers=(True if i == 0 else False), ax=axes[i])

title = f'The Boxplot of "{parameter}" in "{df_name}"{title_extension} {"With Outliers" if i == 0 else "Without Outliers"}, Full Dataset'

wrapped_title = wrap_text(title, 55) # adjusting title width when it's necessary

axes[i].set_title(wrapped_title, fontsize=13, fontweight='bold')

axes[i].set_xlabel(parameter, fontsize=12)

axes[i].tick_params(labelsize=10)

plt.subplots_adjust(wspace=0.2, hspace=0.2)

plt.show()

print('\n')

# calculating and displaying descriptive statistics of the parameter and a summary about its distribution skewness

print('='*frame_len)

display(Markdown(f'**Statistics on `{parameter}` in `{df_name}`{title_extension}**\n'))

print(f'{df[parameter].describe()}')

#print('Median:', round(df[parameter].median(),1)) #may be redundant, as describe() method already provides 50% value

print('-'*frame_len)

# defining skewness

skewness = df[parameter].skew()

abs_skewness = abs(skewness)

if abs_skewness < 0.5:

skewness_explanation = '\033[1;32mslightly skewed\033[0m' # green

elif abs_skewness < 1:

skewness_explanation = '\033[1;33mmoderately skewed\033[0m' # yellow

elif abs_skewness < 5:

skewness_explanation = '\033[1;31mhighly skewed\033[0m' # red

else:

skewness_explanation = '\033[1;31;2mextremely skewed\033[0m' # dark red

direction = 'right' if skewness > 0 else 'left'

print(f'The distribution is {skewness_explanation} to the {direction} \n(skewness: {skewness:.1f})')

print(f'\n\033[1mNote\033[0m: outliers affect skewness calculation')

# calculating and displaying descriptive statistics and information on outliers

if outliers_info:

Q1 = round(df[parameter].quantile(0.25))

Q3 = round(df[parameter].quantile(0.75))

IQR = Q3 - Q1

min_iqr = Q1 - round(1.5 * IQR)

max_iqr = Q3 + round(1.5 * IQR)

print('-'*frame_len)

print('Min border:', min_iqr)

print('Max border:', max_iqr)

print('-'*frame_len)

total_count = len(df[parameter])

outliers_count = len(df[(df[parameter] < min_iqr) | (df[parameter] > max_iqr)])

outliers_over_max_iqr_count = len(df[df[parameter] > max_iqr])

outlier_percentage = round(outliers_count / total_count * 100, 1)

outlier_over_max_iqr_percentage = round(outliers_over_max_iqr_count/ total_count * 100, 1)

if min_iqr < 0:

print(f'The outliers are considered to be values above {max_iqr}')

print(f'We have {outliers_over_max_iqr_count} values that we can consider outliers')

print(f'Which makes {outlier_over_max_iqr_percentage}% of the total "{parameter}" data')

else:

print(f'The outliers are considered to be values below {min_iqr} and above {max_iqr}')

print(f'We have {outliers_count} values that we can consider outliers')

print(f'Which makes {outlier_percentage}% of the total "{parameter}" data')

print('='*frame_len) Function: percentile_outliers

def percentile_outliers(df, parameter, title_extension='', lower_percentile=3, upper_percentile=97, frame_len=70, print_limits=False):

"""

The function identifies outliers in a DataFrame column using percentile limits.

As input this function takes:

- df: the DataFrame containing the data to be analyzed.

- parameter (str): the column of the DataFrame to be analyzed.

- title_extension (str, optional): additional text to append to the plot titles. Default - empty string.

- lower percentile (int, float): the lower percentile threshold. Default - 3)

- upper percentile (int, float): the upper percentile threshold. Default - 97)

- frame_len (int, optional): the length of frame of printed outputs. Default - 70.

- print_limits (bool, optional): whether to print the limits dictionary. Default - False.

As output the function presents:

- upper and lower limits of outliers and their share of the innitial DataFrame

- the function creates the dictionary with limits names and their values and updates the global namespace respectively.

"""

# adjusting output frame width

if print_limits:

frame_len = 110

# adjusting the title extension

if title_extension:

title_extension = f' {title_extension}'

# calculating the lower and upper percentile limits

lower_limit = round(np.percentile(df[parameter], lower_percentile), 2)

upper_limit = round(np.percentile(df[parameter], upper_percentile), 2)

# identifying outliers

outliers = df[(df[parameter] < lower_limit) | (df[parameter] > upper_limit)]

outliers_count = len(outliers)

total_count = len(df[parameter])

outlier_percentage = round(outliers_count / total_count * 100, 1)

# displaying data on outliers

print('='*frame_len)

display(Markdown(f'**Data on `{parameter}` outliers {title_extension} based on the "percentile approach"**\n'))

print(f'The outliers are considered to be values below {lower_limit} and above {upper_limit}')

print(f'We have {outliers_count} values that we can consider outliers')

print(f'Which makes {outlier_percentage}% of the total "{parameter}" data')

# retrieving the df name

df_name = get_df_name(df) if get_df_name(df) != "name not found" else "df"

# creating dynamic variable names

lower_limit_name = f'{df_name}_{parameter}_lower_limit'

upper_limit_name = f'{df_name}_{parameter}_upper_limit'

# creating a limits dictionary

limits = {lower_limit_name: lower_limit, upper_limit_name: upper_limit} # we can refer to them in further analyses, if needed

# updating global namespace with the limits

globals().update(limits)

# printing limits, if required

if print_limits:

print('-'*frame_len)

print(f'Limits: {limits}')

print('='*frame_len) Function: plot_totals_distribution

def plot_totals_distribution(df, parameter_column, value_column, n_items=20, sample_type='head', random_state=None,

show_outliers=False, fig_height=500, fig_width=1000, color_palette=None,

sort_ascending=False, title_start=True, title_extension='', plot_totals=True, plot_distribution=True, consistent_colors=False):

"""

This function calculates and displays the following:

- A horizontal bar chart of the specified items by total value (optional).

- Box plots showing the distribution of values for each specified item (optional).

As input the function takes:

- df (DataFrame): the data to be analyzed.

- parameter_column (str): name of the column containing the names of parameters (e.g., product names).

- value_column (str): name of the column containing the values to be analyzed (e.g., 'quantity').

- n_items (int, optional): number of items to display. Default - 20.

- sample_type (str, optional): type of sampling to use. Options are 'sample', 'head', or 'tail'. Default - 'head'.

- random_state (int, optional): controls the randomness of sample selection. Default - None.

- show_outliers (bool, optional): whether to display outliers in the box plots. Default - False.

- fig_height (int, optional): height of the figure in pixels. Default - 600.

- fig_width (int, optional): width of the figure in pixels. Default - 1150.

- color_palette (list, optional): list of colors to use for the plots.

If None, uses px.colors.qualitative.Pastel. Default - None.

- sort_ascending (bool, optional): if True, sorts the displayed parameters in ascending order based on the value column. Sorting is not applied in case of random sampling (when 'sample_type' = 'sample'). Default - False.

- title_start (bool, optional): whether to display information about sampling type in the beginning of a title. Default - True.

- title_extension (str, optional): additional text to append to the plot title. Default - empty string.

- plot_totals (bool, optional): if True, plots the totals bar chart. If False, only plots the distribution (if enabled). Default - True.

- plot_distribution (bool, optional): if True, plots the distribution alongside totals. If False, only plots totals. Default - True.

- consistent_colors (bool, optional): if True, uses the same colors for the same parameter values across different runs. Default - False.

As output the function presents:

- A plotly figure containing one or both visualizations side by side.

"""

# handling error in case of wrong/lacking `parameter_column` or `value_column`

if parameter_column not in df.columns or value_column not in df.columns:

raise ValueError(f'Columns {parameter_column} and/or {value_column} not found in {get_df_name(df)}.')

# defining sampling methods and messages

sampling_methods = {

'sample': lambda df: df.sample(n=min(n_items, len(df)), random_state=random_state),

'head': lambda df: df.nlargest(min(n_items, len(df)), value_column),

'tail': lambda df: df.nsmallest(min(n_items, len(df)), value_column)}

sampling_messages = {

'sample': 'Random',

'head': 'Top',

'tail': 'Bottom'}

# setting default color pallet

if color_palette is None:

color_palette = px.colors.qualitative.Pastel

# creating a color mapping if consistent_colors is True

color_mapping = None

if consistent_colors:

all_parameters = df[parameter_column].unique()

color_mapping = {

param: color_palette[i % len(color_palette)] # reusing colors from the palette if there are more parameters than colors

for i, param in enumerate(all_parameters)}

# grouping data by parameter

df_grouped = df.groupby(parameter_column)[value_column].sum().reset_index()

# applying sampling method

selected_parameters = sampling_methods[sample_type](df_grouped)

# applying sorting if needed (except for random sampling)

if sample_type != 'sample':

#selected_parameters = selected_parameters.sort_values(value_column, ascending=sort_ascending)

selected_parameters = selected_parameters.sort_values(value_column, ascending=not sort_ascending) # reversing the sorting direction (without reversing, sort_ascending=True results in bigger bars at the top of a Totals plot, which is counterintuitive)

# setting the subplot

if plot_totals and plot_distribution:

fig = make_subplots(

rows=1, cols=2,

subplot_titles=(f'<b>\"{value_column}\" Totals</b>', f'<b>\"{value_column}\" Distribution</b>'),

horizontal_spacing=0.05)

elif plot_totals:

fig = make_subplots(rows=1, cols=1, subplot_titles=(f'<b>\"{value_column}\" Totals</b>',))

elif plot_distribution:

fig = make_subplots(rows=1, cols=1, subplot_titles=(f'<b>\"{value_column}\" Distribution</b>',))

else:

raise ValueError('At least one of `plot_totals` or `plot_distribution` must be True.')

# plotting bar chart of totals (left subplot)

if plot_totals:

# determining the colors to use

if consistent_colors:

bar_colors = [color_mapping[param] for param in selected_parameters[parameter_column]]

else:

bar_colors = [color_palette[i % len(color_palette)] for i in range(len(selected_parameters))] # reusing colors from the palette if there are more parameters than colors

fig.add_trace(

go.Bar(

x=selected_parameters[value_column],

y=selected_parameters[parameter_column],

orientation='h',

text=[EngFormatter(places=1)(x) for x in selected_parameters[value_column]],

textposition='inside',

marker_color=bar_colors,

showlegend=False),

row=1, col=1 if plot_distribution else 1)

# plotting box plot chart of totals (right subplot)

if plot_distribution:

selected_parameters_list = selected_parameters[parameter_column].tolist()

for parameter_id, parameter_value in enumerate(selected_parameters_list):

parameter_data = df[df[parameter_column] == parameter_value]

# determining outliers and bounds for future boxplots

if not show_outliers:

q1 = parameter_data[value_column].quantile(0.25)

q3 = parameter_data[value_column].quantile(0.75)

iqr = q3 - q1

parameter_data = parameter_data[

(parameter_data[value_column] >= q1 - 1.5 * iqr) &

(parameter_data[value_column] <= q3 + 1.5 * iqr)]

# determining the colors to use

if consistent_colors:

box_color = color_mapping[parameter_value]

else:

box_color = color_palette[parameter_id % len(color_palette)] # reusing colors from the palette if there are more parameters than colors

# adding a box plot for this item

fig.add_trace(

go.Box(

x=parameter_data[value_column],

y=[parameter_value] * len(parameter_data),

name=parameter_value,

orientation='h',

showlegend=False,

marker_color=box_color,

boxpoints='outliers' if show_outliers else False),

row=1, col=2 if plot_totals else 1)

# adjusting the appearance

sampling_message = f'{sampling_messages[sample_type]} {n_items}'

if title_start:

title_start = sampling_message

else:

title_start = ''

title_text = f'<b>{title_start} \"{value_column}\" by \"{parameter_column}\"{" " + title_extension if title_extension else ""}: {"Totals and Distribution" if plot_totals and plot_distribution else "Totals" if plot_totals else "Distribution"}</b>'

fig.update_layout(

height=fig_height,

width=fig_width,

title={

'text': title_text,

'font_size': 19, 'y': 0.95, 'x': 0.5})

if plot_totals:

fig.update_xaxes(title_text=value_column, row=1, col=1)

if plot_distribution:

fig.update_xaxes(title_text=value_column, title_font=dict(size=14), row=1, col=2 if plot_totals else 1)

fig.update_yaxes(title_text=parameter_column, title_font=dict(size=14), row=1, col=1)

if plot_totals:

fig.update_yaxes(title_text='', showticklabels=False, row=1, col=2)

else:

fig.update_yaxes(title_text=parameter_column, row=1, col=1)

return fig.show();# checking outliers with IQR approach + descriptive statistics

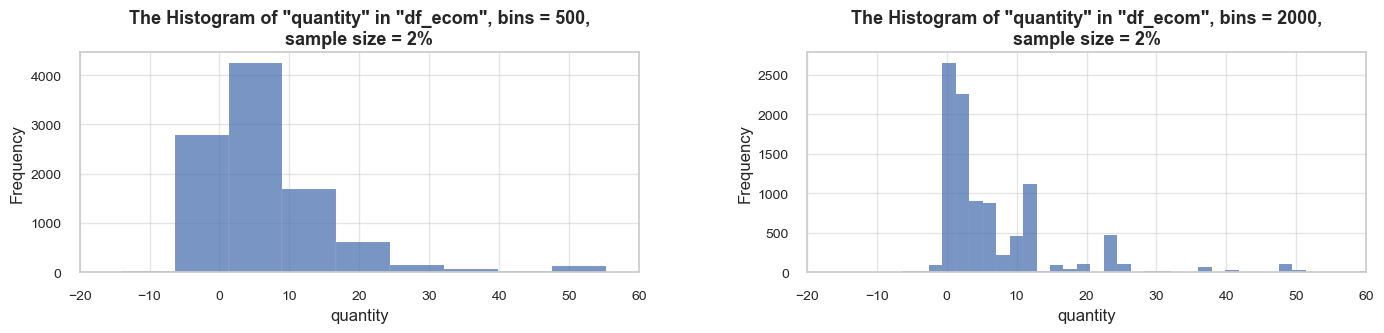

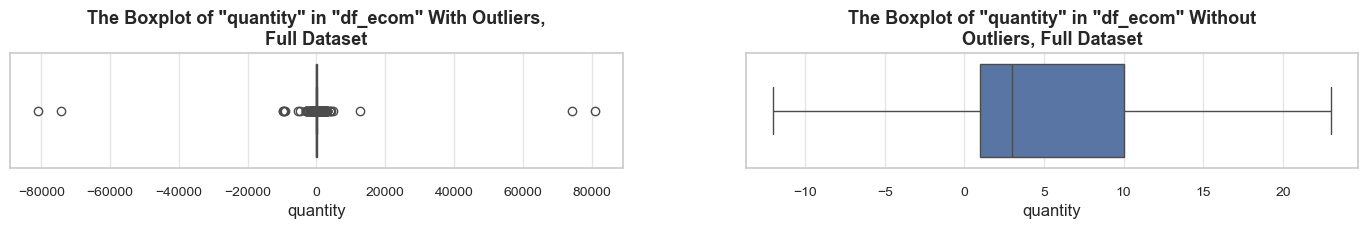

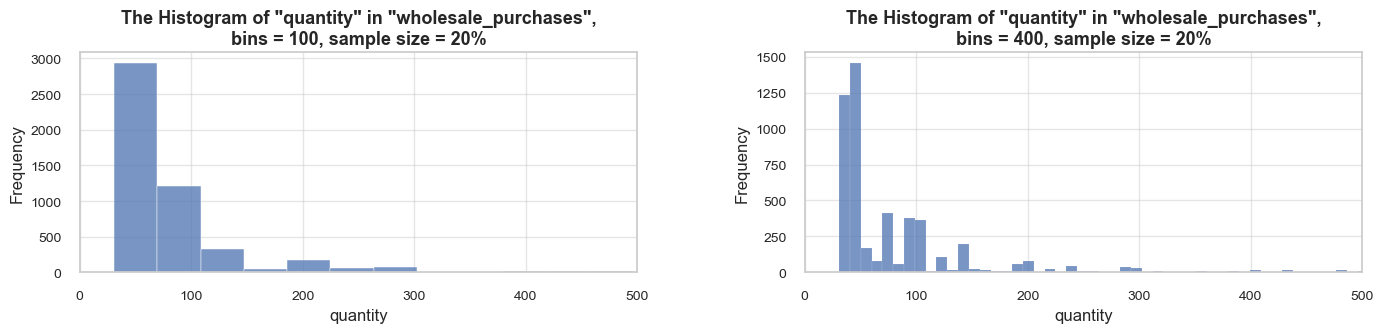

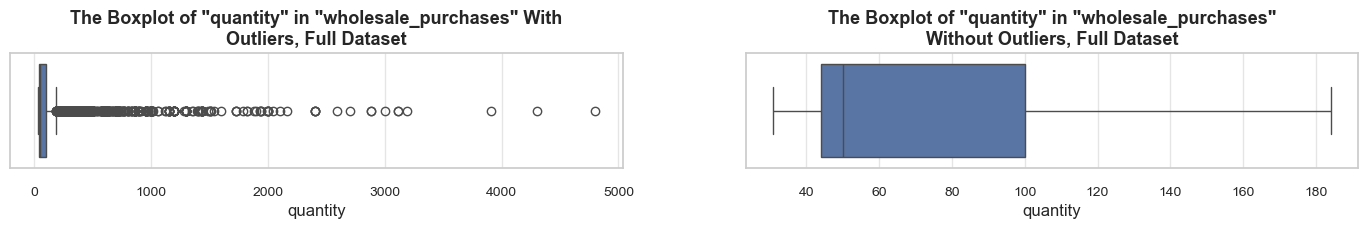

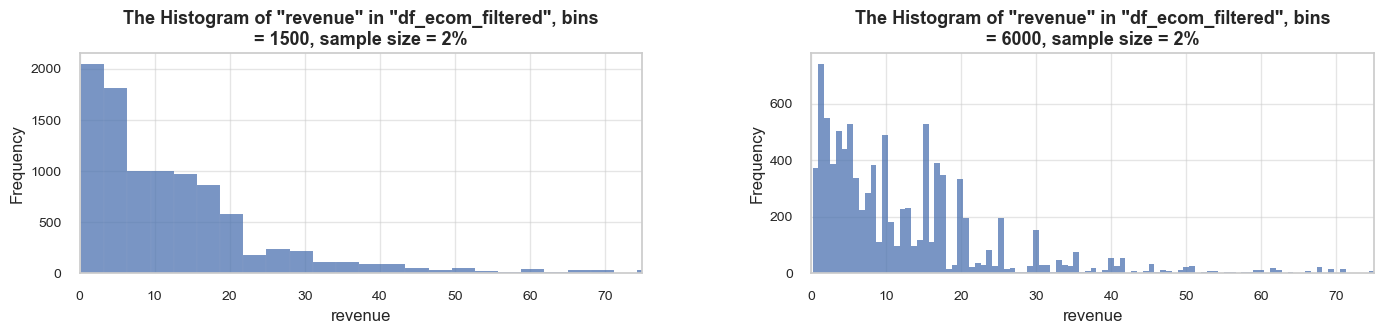

distribution_IQR(df=df_ecom, parameter='quantity', title_extension='', x_limits=[-20, 60], bins=[500, 2000], speed_up_plotting=True, outliers_info=True)Note: A sample data slice 2% of "df_ecom" was used for histogram plotting instead of the full DataFrame.

This significantly reduced plotting time for the large dataset. The accuracy of the visualization might be slightly reduced, meanwhile it should be sufficient for exploratory analysis.

==================================================Statistics on quantity in df_ecom

count 535185.00 mean 9.67 std 219.06 min -80995.00 25% 1.00 50% 3.00 75% 10.00 max 80995.00 Name: quantity, dtype: float64 -------------------------------------------------- The distribution is slightly skewed to the left (skewness: -0.3) Note: outliers affect skewness calculation -------------------------------------------------- Min border: -13 Max border: 24 -------------------------------------------------- The outliers are considered to be values above 24 We have 32411 values that we can consider outliers Which makes 6.1% of the total "quantity" data ==================================================

# let's check descriptive statistics of quantity by product

products_quantity_ranges = df_ecom.groupby('stock_code')['quantity']

#products_quantity_var = products_quantity_ranges.var().mean()

#products_quantity_std = products_quantity_ranges.std().mean()

products_quantity_cov = products_quantity_ranges.apply(

lambda x: (x.std() / x.mean() * 100) if x.mean() != 0 else 0)\

.mean()

#print(f'\033[1mAverage variation of a stock code quantity:\033[0m {products_quantity_var:.0f}')